Google is undoubtedly the world’s most sophisticated search engine today, yet it’s nowhere near the true information companion we seek. This article explores the future of human-computer interaction and proposes how search engines will learn and interact with their users in the future.

“Any sufficiently advanced technology is indistinguishable from magic.”

Arthur C. Clarke

Amy’s Conversation With Google

Amy wakes up in the morning, makes her coffee and decides to ask Google something that’s been at the back of her mind for a while. It’s a complex, health-related query, the kind of thing she would normally ask her doctor. Google and Amy have been having conversations for a few years now and know each other pretty well, so the question shouldn’t be too much of a problem.

Observe the dialogue, and try to think about the background processes required for this type of search to work.

Hi Google.

Good morning Amy, how’s your tea?

Coffee.

You are having coffee again. It’s been 14 days since you last had one. Have you had your breakfast yet?

No.

You asked me to remind you to have breakfast in the morning as you’re trying to be healthy. Should I wait until you come back?

No. Thank you. I wanted to talk to you about something.

Great, I enjoy our conversations Amy. How can I help?

I’ve been taking antibiotics for a week now and wondering how long I need to wait before I can do my urea breath test.

Amy, are you asking me for more information about antibiotics and the urea breath test?

No, Google. I am asking you about waiting time between taking antibiotics and the test.

I have information about the urea breath test linked with waiting time; the level of confidence is only 20%. Can you tell me more?

My antibiotics were: Amoxil, Klacid and Nexium. I took four tablets twice per day. They’re for H. pylori.

Thank you Amy. Does Nexium Hp7 sound familiar?

Maybe. Can you show me some pictures?

Just a moment… Here.

Yes. That’s my medicine.

Nexium Hp7 is used to treat H. pylori. The recommended waiting time between the end of treatment and the urea breath test is four weeks. I am 80% certain this is the correct answer.

Show me your references.

Here.

Thanks. Show results from Australia only.

Result confidence 75%

Remove discussion results.

Result confidence 85%

Show worldwide results.

Result confidence 95%

Google, I stopped my antibiotics on the 24th of November, what date is four weeks from then?

22nd of December.

Book a doctor’s visit for that date in my calendar.

Amy, that will be Saturday. Do you still wish me to book it for you?

No. Book it for Monday.

Monday the 17th or 24th?

24th.

Would you like to set a reminder about this appointment?

Yes. Set reminder for tomorrow.

Reminder set. Amy, are you going to have your breakfast now?

Haha, yes. Thanks Google.

Dialogue Interpretation and Background Information

The above dialogue may seem rather straightforward, but for Google to reach this level would require serious advances in many areas, including natural language processing, speech recognition, indexing and algorithms.

Conversation Initiation

Amy starts a conversation with Google by saying “Hi Google”.

Verbal Information Extraction

Google responds with “Good morning”, knowing it’s 7am. It also records the time of Amy’s interaction for future reference, building a map of her waking and computer-interaction habits across weekdays, weekends and holidays. Google makes small talk in order to keep tabs on Amy’s morning habits and, based on previous instances, assumes she is having tea. Amy says just one word, “coffee”, but Google links it to its previous question, “how’s your tea”, and replaces “tea” with “coffee” in its entry for Amy’s morning habits. Google also offers Amy an interesting bit of information (14 days without coffee). Since Amy hasn’t asked anything specific yet, Google takes the opportunity to check on a previously established reminder to have breakfast in the morning. When Amy says she hasn’t had any, it politely offers to wait until she comes back.
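To make the habit-tracking idea more concrete, here is a minimal Python sketch of how an assistant might keep a per-user log of morning habits, guess the usual drink and count the days since the last coffee. The `HabitLog` class, its methods and the dates are hypothetical illustrations, not anything Google has described.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Optional


# Hypothetical habit log: illustrates the idea, not how Google stores anything.
@dataclass
class HabitLog:
    # Each entry is (day, habit, value), e.g. (date(2012, 11, 26), "morning_drink", "tea").
    entries: list = field(default_factory=list)

    def record(self, day: date, habit: str, value: str) -> None:
        self.entries.append((day, habit, value))

    def usual(self, habit: str) -> Optional[str]:
        """Most frequently recorded value for a habit: the assistant's default guess."""
        counts = {}
        for _, h, v in self.entries:
            if h == habit:
                counts[v] = counts.get(v, 0) + 1
        return max(counts, key=counts.get) if counts else None

    def days_since(self, habit: str, value: str, today: date) -> Optional[int]:
        """Days between today and the most recent earlier occurrence of value."""
        earlier = [d for d, h, v in self.entries if h == habit and v == value and d < today]
        return (today - max(earlier)).days if earlier else None


# Made-up history: one coffee, then thirteen mornings of tea.
log = HabitLog()
log.record(date(2012, 11, 12), "morning_drink", "coffee")
for day in range(13, 26):
    log.record(date(2012, 11, day), "morning_drink", "tea")

today = date(2012, 11, 26)
guess = log.usual("morning_drink")            # "tea", hence "how's your tea?"
log.record(today, "morning_drink", "coffee")  # Amy corrects it: "Coffee."
gap = log.days_since("morning_drink", "coffee", today)  # 14 days since the last one
```

Interaction timestamps (the 7am greeting, weekday versus weekend use) could be logged the same way and summarised into the waking-habit map.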

Query Initiation

Amy gets to the point and tells Google she has a complex query for it.

Understanding User Query

Google readily responds and eagerly awaits more data. It encourages Amy to continue the conversation by saying that it “enjoys” their chats.

Amy’s initial statement is vague and Google doesn’t have much data to work with.

“I’ve been taking antibiotics for a week now and wondering how long I need to wait before I can do my urea breath test.” She knows Google will need further information and expects a follow-up question.

In its follow-up question Google recognises this is a health enquiry and isolates two key terms, “antibiotics” and “urea breath test”, offering to give separate information on both. It’s the best it can do with the current level of interaction.

Amy clarifies that her query is more about the time period required to wait between taking antibiotics and her breath test.

Google is able to find results related to “time”, “antibiotics” and the “urea breath test”. It is confident about each result separately, but not about combining them into what Amy would find a satisfactory answer. It knows this, so it tells Amy that it’s only 20% confident in its recommendation and prompts her for more data.

Amy specifies the name of antibiotics she’s been taking and their purpose.

Term Disambiguation

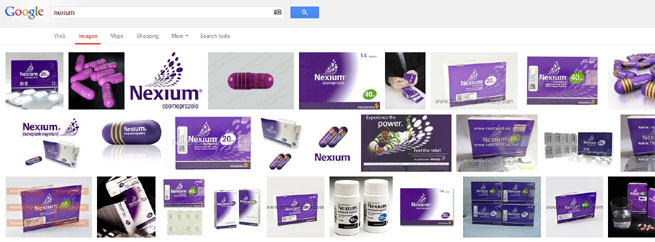

At first Google transcribes “Panoxyl, Placid…”, but when the third term, “Nexium”, is introduced it disambiguates the other two and the terms are quickly consolidated to “Amoxil, Klacid, Nexium”. Once Amy mentions “H. pylori”, Google is able to seek further information and discovers a high co-occurrence of the term “Hp7”. As a final confirmation, it asks Amy whether this is significant in her case.
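The consolidation step can be thought of as joint decoding: rather than fixing each word as it is heard, the system keeps alternatives and picks the combination that fits together best. The sketch below is a toy version of that idea; the candidate words’ scores, the co-occurrence values and the additive scoring rule are all invented, and real speech recognisers rely on far more sophisticated language models.

```python
from itertools import combinations, product

# Hypothetical recogniser output: alternative transcriptions for each spoken
# word, with an acoustic score (higher = closer to what was heard).
hypotheses = [
    [("Panoxyl", 0.6), ("Amoxil", 0.4)],
    [("Placid", 0.6), ("Klacid", 0.4)],
    [("Nexium", 0.9)],
]

# Invented co-occurrence strengths between terms (e.g. how often they appear
# in the same documents). Pairs not listed count as 0.
cooccurrence = {
    frozenset({"Amoxil", "Klacid"}): 0.3,
    frozenset({"Amoxil", "Nexium"}): 0.7,
    frozenset({"Klacid", "Nexium"}): 0.7,
}


def score(choice):
    """Acoustic evidence plus how strongly the chosen words co-occur."""
    acoustic = sum(s for _, s in choice)
    words = [w for w, _ in choice]
    context = sum(cooccurrence.get(frozenset(pair), 0.0) for pair in combinations(words, 2))
    return acoustic + context


def best_transcription(hyps):
    """Pick the jointly best-scoring combination of alternatives."""
    return [w for w, _ in max(product(*hyps), key=score)]


before = best_transcription(hypotheses[:2])  # ['Panoxyl', 'Placid']
after = best_transcription(hypotheses)       # ['Amoxil', 'Klacid', 'Nexium']
```

With only two words heard, the acoustically stronger “Panoxyl, Placid” wins; once “Nexium” arrives, its strong links to the real drug names outweigh the acoustic preference.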

Multi-Modal Search

Amy is unsure and wants visual results.

Google shows image search results for “Hp7” and Amy realises that’s the medicine pack she’s been taking and confirms this with Google.

The Answer

Google is now able to look up the “time” required to wait. It finds all available references and sorts them by relevance and trust. It gives Amy the answer with 80% confidence. The recommended waiting time is four weeks.

Human Result Verification

Amy decides to verify this by asking Google to show its references, then applies a few search filters to establish a higher confidence level. At 95% she is satisfied and decides to engage Google as a personal assistant by asking what date falls four weeks after she stopped taking her antibiotics.
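One simple way to model a confidence figure that responds to filters is to let each reference vote for an answer, weighted by relevance and trust, and report the share of the vote the winning answer receives. The Python sketch below assumes that scheme; the `Reference` fields, the weighting and the data are hypothetical, and the numbers it produces are not meant to reproduce the percentages in the dialogue.

```python
from dataclasses import dataclass


@dataclass
class Reference:
    source: str
    country: str
    kind: str         # e.g. "official", "clinic", "discussion"
    weeks: int        # waiting time this source recommends
    relevance: float  # 0..1, how well it matches the query
    trust: float      # 0..1, how reliable the source is


def answer_with_confidence(refs):
    """Weight each reference by relevance * trust, pick the best-supported
    answer, and report the share of total weight that agrees with it."""
    votes = {}
    for r in refs:
        votes[r.weeks] = votes.get(r.weeks, 0.0) + r.relevance * r.trust
    best = max(votes, key=votes.get)
    return best, votes[best] / sum(votes.values())


def filtered(refs, *keep):
    """Apply search filters: keep references that pass every predicate."""
    return [r for r in refs if all(k(r) for k in keep)]


# Invented reference set for illustration only.
refs = [
    Reference("health department page", "AU", "official", 4, 0.9, 0.9),
    Reference("gastro clinic FAQ", "AU", "clinic", 4, 0.8, 0.8),
    Reference("UK hospital leaflet", "UK", "official", 4, 0.8, 0.9),
    Reference("pharmacy leaflet", "US", "clinic", 2, 0.7, 0.6),
    Reference("forum thread", "AU", "discussion", 2, 0.6, 0.3),
    Reference("forum thread", "US", "discussion", 1, 0.5, 0.3),
]

overall = answer_with_confidence(refs)
australia = answer_with_confidence(filtered(refs, lambda r: r.country == "AU"))
no_discussion = answer_with_confidence(filtered(refs, lambda r: r.kind != "discussion"))
```

With this made-up data, removing discussion threads raises the confidence in the four-week answer, because the dissenting forum posts no longer dilute the vote.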

Personal Assistant

Google returns the date and Amy asks it to make a calendar entry. Google is aware the date falls on a Saturday and wants to confirm if that’s still OK. When Amy suggests “Monday”, Google disambiguates by offering two alternative dates and only then books the appointment. Google doesn’t mind being a personal assistant, as this feeds it more valuable data and enables it to perform better in the future.
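The calendar logic in this exchange is plain date arithmetic. Here is a short sketch using Python’s standard `datetime` module, assuming the year is 2012 (a year in which 22 December falls on a Saturday and the 17th and 24th on Mondays, as in the dialogue); the helper names are illustrative.

```python
from datetime import date, timedelta


def weeks_after(start: date, weeks: int) -> date:
    return start + timedelta(weeks=weeks)


def surrounding_mondays(day: date) -> tuple:
    """The Monday on or before `day` and the Monday one week later."""
    previous = day - timedelta(days=day.weekday())  # weekday(): Monday is 0
    return previous, previous + timedelta(days=7)


# Assumes the year is 2012, which matches the weekdays in the dialogue.
target = weeks_after(date(2012, 11, 24), 4)  # date(2012, 12, 22)
if target.weekday() >= 5:                      # 5 = Saturday, 6 = Sunday
    options = surrounding_mondays(target)      # (17 December, 24 December)
else:
    options = (target,)
```

Offering both Mondays, rather than silently picking one, mirrors how Google resolves Amy’s ambiguous “Monday”.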

Back to Conversation

Since no further conversation takes place Google takes the opportunity to remind Amy to have her breakfast.

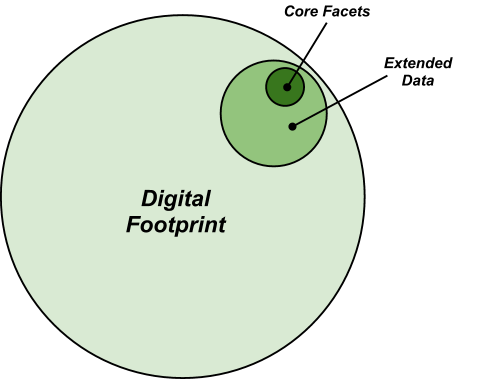

Human Facets

In order to truly “understand” an individual, a search engine must build a comprehensive framework, or template, of the user, which it then gradually populates and updates with a stream of available data. A base model of a human user may consist of a handful of core facets, hundreds of extended essential properties and thousands of additional bits of associated data.

Core facets would contain the essential user properties without which a search engine would not be able to deliver personalised results effectively (e.g. Amy’s Google login, the language she speaks or the country she lives in).

Extended data may contain more granular or specific data that enriches the understanding of the user, including their status, habits, motivations and intentions (e.g. Amy’s browsing history, her operating system, gender, content she wrote in the past, her emails, and her social posts and connections).

A digital footprint may consist of the user’s activity trail, timestamps and other non-significant or perishable user data. Footprint creation is typically automated, or a by-product of the user’s activity on-line (e.g. the time and date of Amy’s Google+ posts). A portion of one’s digital footprint could be disposable, while the rest may help make sense of the extended data (e.g. Amy’s Facebook timeline).
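The three layers of the user model map naturally onto a simple data structure. Below is a minimal sketch using Python dataclasses; the field names, the `perishable` flag and the 30-day pruning rule are hypothetical choices made for illustration.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta


@dataclass
class FootprintEvent:
    kind: str                # e.g. "login", "post"
    at: datetime
    perishable: bool = True  # disposable once it has served its purpose


@dataclass
class UserModel:
    # Core facets: required before any personalisation is possible.
    login: str
    language: str
    country: str
    # Extended properties: granular data such as habits, OS, interests.
    extended: dict = field(default_factory=dict)
    # Digital footprint: automated activity trail with timestamps.
    footprint: list = field(default_factory=list)

    def prune_footprint(self, now: datetime, max_age: timedelta) -> None:
        """Discard perishable events older than max_age; keep everything else."""
        self.footprint = [
            e for e in self.footprint if not e.perishable or now - e.at <= max_age
        ]


# Hypothetical values throughout.
amy = UserModel(login="amy@example.com", language="en", country="AU")
amy.extended["operating_system"] = "Android"
now = datetime(2012, 12, 1, 7, 0)
amy.footprint += [
    FootprintEvent("login", now - timedelta(days=90)),
    FootprintEvent("post", now - timedelta(days=90), perishable=False),
    FootprintEvent("login", now - timedelta(days=1)),
]
amy.prune_footprint(now, max_age=timedelta(days=30))
```

After pruning, the old login disappears while the older post survives, reflecting the split between a disposable footprint and the part that helps interpret extended data.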

Data Retrieval

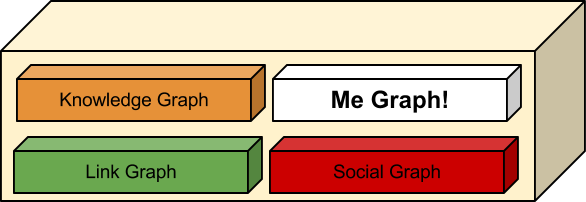

Google indexes a rather small fraction of the web and continuously attempts to solve the problem of the “Deep Web”[1]. After the success of Gmail, Google+ was the next step forward[2] in digging deeper[3] than just the Surface Web. Both are very much about access to users’ data that would ordinarily be hidden away.

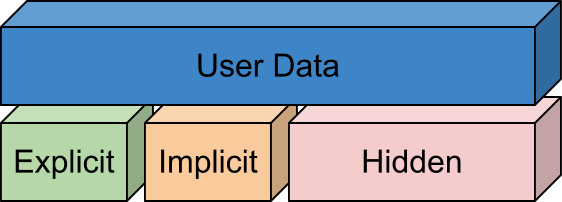

Amy’s data breaks down into three basic segments by their accessibility to the search engine:

Explicit data is the freely available information, which may include her public profile information, social connections, +1s, shares[4], authored content, liked videos, public reviews and similar items.

Implicit data represents inferred connections in the “index of Amy”, much as Google+ builds an implicit social graph by observing what content she shares and comments on.

Hidden data is the vast amount of information that is inaccessible to Google or that Amy has deliberately withheld (e.g. her date of birth, phone number or street address).
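Implicit data can be illustrated with a tiny inference: weight each of Amy’s interactions with other people’s content and sum the weights per author to estimate tie strength. This is a hypothetical sketch; the action names and weights are assumptions, and it is not a description of how Google+ actually built its social graph.

```python
from collections import Counter

# Hypothetical interaction weights: a share is taken as a stronger signal
# than a comment, and a comment as stronger than a +1.
WEIGHTS = {"share": 3, "comment": 2, "plus_one": 1}


def implicit_ties(events):
    """Infer tie strength to each author from interactions with their content."""
    ties = Counter()
    for action, author in events:
        ties[author] += WEIGHTS.get(action, 0)
    return ties.most_common()


# Invented activity stream: (action, author of the content acted on).
activity = [
    ("share", "ben"), ("comment", "ben"), ("comment", "cara"),
    ("share", "ben"), ("plus_one", "dan"), ("comment", "cara"),
]
ties = implicit_ties(activity)  # [('ben', 8), ('cara', 4), ('dan', 1)]
```

None of these ties were declared by Amy; they are inferred purely from her behaviour, which is what separates implicit from explicit data.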

Available Data

“Google policy is to get right up to the creepy line and not cross it.”

Eric Schmidt, Google

Data available to a search engine could come from any number of on-line and offline techniques tied in with the ways users interact with Google. We’ve compiled an unordered list of possible methods[5], techniques and data sets Google could access in order to further enhance Amy’s search experience:

• Search history

• Language

• Browser data (bookmarks, navigational paths, visited websites)

• Login locations, GEO-IP, places lived, geo tagging / explicit check-ins

• Search preferences, search behaviour (filters, patterns)

• Social data (interactions, active times, close people, content)

• Calendar content (Events, holidays, meetings, birthdays, anniversaries)

• Purchasing behaviour (on-line shopping)

• App download and usage metrics

• Human metrics (BMI, weight, temperature, etc.)

• Mood and sentiment measuring

• To-do lists

• Interactions with ads

• Payment system usage

• External services used via the search engine’s own OAuth

• Income, health history, jobs, family status (kids, spouse, relatives)

• Reading habits

• Screen resolution, device(s), operating systems, browsers used

• Acting as an ISP, DNS or email provider

• RFID, NFC

• Postal items received

• Explicit surveys

• Connection speed

Many of these are currently locked away for privacy reasons, some are not yet technologically feasible, and others may in fact already be used to personalise her search results.

The Missing Link

A perfect search engine is the “one that understands exactly what you mean and gives you exactly what you want.”

Larry Page, Google

Google currently extracts information largely as a background process. Amy gives away much of her data (used for personalisation) without thinking about it. The rest consists of bits and pieces she has specified in various Google accounts, including her search profile. I wouldn’t call it creepy just yet, but it’s definitely done mostly in the background, with a slight “big brother” vibe to it.

The Solution

The solution is to allow Amy to deliberately feed Google information about herself. To do this effectively she would need to have a series of natural conversations with Google, just as two friends get to know each other over time.

Current State

Natural language is still the most comfortable way for the majority of users to communicate, and we can see glimpses of its potential in Google Now. Amy can already ask Google simple questions by talking to her tablet or phone, with answers returned by voice and on the device display. Although Google has done much research[6] in this field, we have yet to see the search engine provide meaningful verbal comprehension or feedback.

Advantages and Disadvantages

Verbal input is not meant to replace all other forms of data input, but to provide Google with additional information in what feels like a natural conversation. This gives Amy the ability to easily update Google on her current mood, health status, music preferences, eating habits, etc.

By using Google as her organiser (or personal assistant), Amy feeds it additional insights into her travel, meetings and personal events, improving the quality of all future suggestions and recommendations. The whole “conversation model” would significantly improve Amy’s interaction with the “machine” and would at last give her a break from all the hardware she’s attached to.

Talking to Google is something Amy would do in a private and relatively quiet environment. She uses both “work mode” and “home mode” on her account, but noisy environments such as her busy office make it difficult to issue coherent voice commands.

The Index of One

Collecting information represents just one step in a search engine’s attempt to comprehend the user. Making sense of the collected information, however, is not a completely foreign concept to Google. The idea is to build and maintain an index of a single individual.

Breaking The Bubble

One risk associated with the “index of Amy” is the “bubble effect”[7], where she is continuously presented with more of what she wants or already knows. For example, Amy is a coffee connoisseur, so when she searches for “Java” she is now more likely to see coffee-related results than someone who is interested in travel and geography and looking for information about the island in Indonesia.

Introducing random, non-personalised results might be a good way to keep a stream of fresh data flowing into Amy’s results and answers.
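One way to introduce random, non-personalised results is to give each result slot a small probability of being filled from a general, non-personalised ranking instead, similar in spirit to epsilon-greedy exploration. A minimal sketch, where `blend`, `explore_rate` and the sample result lists are hypothetical:

```python
import random


def blend(personalised, general, k, explore_rate=0.2, rng=None):
    """Build k results, mostly from the personalised ranking, but with
    probability explore_rate take the next non-personalised result instead."""
    rng = rng or random.Random()
    pools = [list(personalised), list(general)]
    out = []
    while len(out) < k and (pools[0] or pools[1]):
        # Explore when the coin flip says so (and general results remain),
        # or when the personalised pool has run out.
        explore = (rng.random() < explore_rate and bool(pools[1])) or not pools[0]
        item = pools[1 if explore else 0].pop(0)
        if item not in out:  # skip results already shown
            out.append(item)
    return out


personalised = ["Java coffee beans", "Java roasting guide", "Best Java espresso", "Java coffee history"]
general = ["Java (island), Indonesia", "Java programming language", "Java coffee beans"]
results = blend(personalised, general, k=5, explore_rate=0.3, rng=random.Random(7))
```

Setting `explore_rate` to 0 returns the purely personalised ranking; raising it trades some relevance for fresh results such as the island of Java.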

Google’s Evolutionary Timeline

Level 1: Semantic Capabilities (2012-2015)

Currently Google is capable of rendering the basic data available to it and making sense of rudimentary knowledge nodes. In the next few years we will see Google’s search results answer our questions more efficiently, and we will start to see the first glimpses of something “smart”.

- Personal characteristics and behavioural patterns

- Connections, affiliations, status and memberships

- User intentions and preferences

- Temporal, time-sensitive data

Level 2: The Smart Machines (2015-2030)

Once Google refines its algorithmic treatment of the single-user index, it will be able to:

- Predict, suggest, influence and aid future choices

- Research and summarise findings for the user

- Perform background investigative work

- Assist in self-paced learning

- Act as a virtual assistant

- Curate content

Level 3: Artificial Intelligence (2030+)

- Act as a representative

- Join the discussion

- Create new content

- Teach a subject

Level 4: Augmentation (unknown)

- Conversation stops, search stops

- We simply know what we wish to know

If the above sounds too closely aligned with the proposed technological singularity[8], it might just come true now that Ray Kurzweil has joined Google[9] as a director of engineering focusing on machine learning and language processing.

The Future of Search and Knowledge

One of the biggest failures of classic science fiction and futurism was its short-sightedness about how information technology would transform our society. Now that we’re experiencing that transformation, we’re also discovering the biological bottleneck.

The human evolutionary path and our series of adaptations have so far been gradual. As new technologies come onto the stage, we attempt to fit them to our organic pattern. Early adopters and information addicts, for example, enjoy multiple streams of information in real time, but what is the true limit of the human ability to process information effectively? We already seem to be hitting a brick wall.

In the far future, searching for information will be deprecated in favour of instantaneous knowledge. Knowledge processing and the ability to absorb information quickly will eliminate the biological bottleneck and allow humans to keep up with exponentially advancing technology.

Here are a few of my favourite quotes from an insightful interview / article on The Next Web:

“It’s possible we will look to create more communication tools that will advise us how to reason, and advise us how to feel.”, Jonathan MacDonald

“I see touchscreens partially as an intermediate interface technology. Scientists worldwide are working hard to develop Natural User Interfaces with the ultimate aim of making computing and communication ubiquitous as a natural part of our lives.”, David Lemereis

“I’m sure we’ll see brain-to-machine communication where we control various computer types with thinking – that is specific brain activity. In our car, our home and so on.”, Lone Frank

“The bottleneck today isn’t new mediums of communication–video, images, sound, and text serve us very well–but that we are limited in having continuous access to these mechanisms of communication.”, Axel Arnbak

Ongoing Research

Google Research has a whole section on speech processing with 48 publications, and the key people at Google are believers in natural human-computer interaction. Their SVP, Amit Singhal, has made it very clear[10] that he dreams of having a talking computer just like the one from Star Trek. Even the CEO, Larry Page, has a soft spot for futuristic concepts[11]. He is a big fan of Google Glass and self-driving cars.

Google Glass (AFP / Getty Images)

“At Google, we’re able to use the large amounts of data made available by the Web’s fast growth. Two such data sources are the anonymized queries on google.com and the web itself. They help improve automatic speech recognition through large language models: Voice Search makes use of the former, whereas YouTube speech transcription benefits significantly from the latter.” Source

Highlight on Independent Advancements in Basic AI

A key figure in the advancement of machine-based content curation and authoring is Philip M. Parker, who not only built an application capable of curating content but has also published and sold[12] the books it created. Here is a video overview of his software and a link to his patent.

Appendix: Impact on Business

Google is an information company and its job is to provide answers to our questions. If your business is based on the same model, then sooner or later you will be out of the game. Google has no ambition to show other sites’ search results within its own search results. This is not being evil; it’s being efficient and giving its users the best answer, quickly.

Who is at risk?

Any business which provides answers to questions including, but not limited to:

- Data, product and service aggregators

- Flight, hotel, car rental search and booking sites

- Price comparison, weather reports, sports results

- Event calendars and various other data-driven websites

- Domain information, IP address lookups, time/date and currency conversion

Who is safe?

Original producers of content, products, tools and services will continue to enjoy the benefits of organic search traffic. One thing to watch out for is your data being detached from your brand and presented to your audience directly in the search results. Some already fear this might be taking place through rich snippets and the Knowledge Graph.

In Between

At this stage Google is incapable of providing well-organised, curated content or meaningful advice or opinion. In the next ten years or so, human-generated content will remain the dominant source of this type of information and advice. The decade after that, however, will challenge this, and we will see artificial intelligence assume a more meaningful role in content curation, opinion and even advice.

References

Dan Petrovic, the managing director of DEJAN, is Australia’s best-known name in the field of search engine optimisation. Dan is a web author, innovator and a highly regarded search industry event speaker.

ORCID iD: https://orcid.org/0000-0002-6886-3211
