If you’re running a small to medium-sized website, visualising website architecture isn’t difficult. But what do you do if your website exceeds 100,000 or even 1,000,000 pages?

Visio, PowerMapper, SmartDraw and similar applications won’t do the trick. They produce great visualisations in a relatively short time, but they fail, break or time out when facing websites the size of eBay or Amazon. Some webmasters prefer desktop website visualisation software which, left running for days, could in theory scan the whole site. The problem is that even if the tool itself can handle the size of the site, your machine will eventually run out of RAM. It happened to me.

What’s the solution?

You can handle the whole process effortlessly by employing the following tools:

80legs

The best way to describe 80legs is to say it’s a mini Google. Imagine your own server farm with massive crawling capacity which you can employ to crawl the web, process pages and analyse results. Faced with a very large website, 80legs will scale up and deliver results quickly.

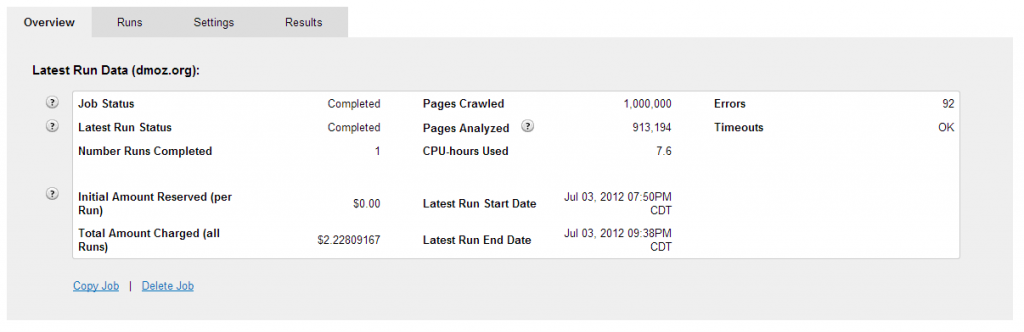

To illustrate what I mean I’ll show you some statistics of when I crawled DMOZ:

One million pages crawled for $2.22 in just a few hours. How good is that?


Getting Started

Note: This section includes official 80Legs help instructions.

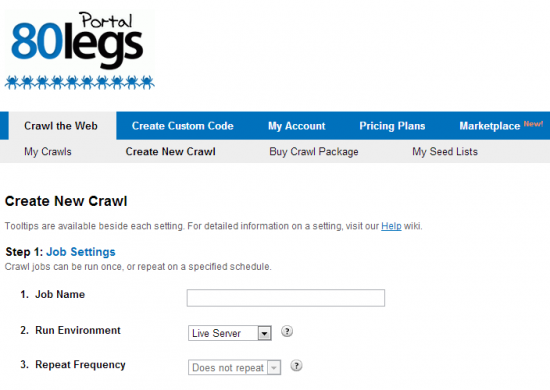

Sign up for 80legs. It’s free and you only need to start paying when you’re ready for a serious crawl. Once logged in, click on “Create New Crawl”, enter the job name and select whether you want to run a test job or a live server crawl. The “Live Server” option runs the crawl on the 80legs platform. The “Test Sandbox” option lets you test a crawl on a limited number of URLs in a test environment. If you are running custom code, the sandbox environment will provide output from your code to allow you to test it.

You can schedule crawls to repeat and run at a specified time each day, week, or month. Crawls will be queued at the start time specified and will normally start running within 2-3 minutes. This last option is available only to paying customers.

Crawl Settings

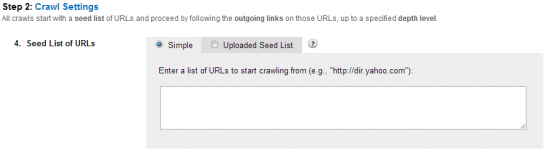

All 80legs crawls must start with a seed list of URLs to crawl from. The links on those URLs are then traversed to continue crawling. Note that all URLs in the seed list are crawled at the same time, in parallel. This list is currently limited to a maximum of 50 URLs. For larger seed lists, use the My Seed Lists section to upload files of up to 10 GB in size (this may be lower based on the Pricing Plan you are subscribed to).

Your seed-list.txt may look like this:

http://www.npr.org/blogs/mycancer/

http://gruntdoc.com/

http://www.pastaqueen.com/halfofme/

http://medbloggercode.com/

http://www.thehealthcareblog.com/

http://aweightlifted.blogs.com/

http://www.bestdiettips.com/

http://www.diet-blog.com/

http://www.viruz.com/

http://www.starling-fitness.com/mail/

http://hundreddayheadstart.com/

http://www.myfitnesspal.com/blog/mike

http://myweightlossjournal.wordpress.com/

http://insureblog.blogspot.com/

http://journeyoffitness.blogspot.com/

http://justanotherweigh.blogspot.com/

http://livinlavidalocarb.blogspot.com/

http://nutritionhelp.blogspot.com/

http://mariaslastdiet.typepad.com/

http://www.healthcentral.com/diabetes/c/110/

To make sure your crawls run fast from the start, include at least a dozen URLs in your seed list. Since pages are crawled in parallel, this ensures there are enough URLs available to crawl at top speed right from the beginning.
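A seed list can be checked before it is uploaded. The following is a minimal sketch, not part of 80legs: the function names and the advisory strings are hypothetical, and the thresholds simply restate the "at least a dozen" advice and the 50-URL inline limit mentioned above.

```python
from urllib.parse import urlsplit

MIN_SEEDS = 12   # "at least a dozen" URLs, per the advice above
MAX_INLINE = 50  # inline seed list limit mentioned in this article


def clean_seed_list(lines):
    """Return unique, absolute http(s) URLs in their original order."""
    seen = set()
    seeds = []
    for line in lines:
        url = line.strip()
        if not url:
            continue  # skip blank lines
        parts = urlsplit(url)
        if parts.scheme not in ("http", "https") or not parts.netloc:
            continue  # skip anything that is not an absolute http(s) URL
        if url not in seen:
            seen.add(url)
            seeds.append(url)
    return seeds


def check_seed_count(seeds):
    """Return a short advisory string about the size of the seed list."""
    if len(seeds) < MIN_SEEDS:
        return "too few"
    if len(seeds) > MAX_INLINE:
        return "upload as file"
    return "ok"
```

Calling `clean_seed_list(open("seed-list.txt"))` and then `check_seed_count` on the result gives a quick sanity check before you paste the list into the crawl form.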

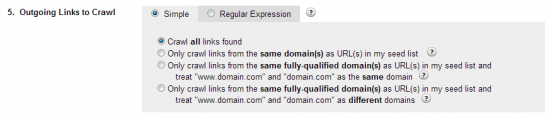

80legs crawls by following the outgoing links it finds on URLs from your seed list. You can configure which outgoing links 80legs should follow as it is crawling. Keep in mind that a page may have links to many other sites or pages on the Internet that are unrelated to the URLs in your seed list.

same domain(s)

Starts with a URL in your seed list and follows links that are from the same parent domain as that URL.

If your seed list has two items, http://test1.msn.com and http://test2.yahoo.com, the crawl starting from http://test1.msn.com will only follow links that contain the parent domain “msn.com” and the crawl from http://test2.yahoo.com will only follow links that contain the parent domain “yahoo.com”.

same fully-qualified domain(s)

Starts with a URL in your seed list and follows links that are from the same fully-qualified domain as that URL. Note that www.domain.com and domain.com will be treated as the same domain during the crawl.

If your seed list has two items, http://test1.msn.com and http://test2.yahoo.com, the crawl starting from http://test1.msn.com will only follow links that contain the fully-qualified domain “test1.msn.com” and the crawl from http://test2.yahoo.com will only follow links that contain the fully-qualified domain “test2.yahoo.com”.

same fully-qualified domain(s)

Starts with a URL in your seed list and follows links that are from the same fully-qualified domain as that URL. Note that www.domain.com and domain.com will be treated as different domains during the crawl.

If your seed list has two items, http://test1.msn.com and http://test2.yahoo.com, the crawl starting from http://test1.msn.com will only follow links that contain the fully-qualified domain “test1.msn.com” and the crawl from http://test2.yahoo.com will only follow links that contain the fully-qualified domain “test2.yahoo.com”.
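The difference between the three link-following options can be sketched in a few lines of Python. This is an illustration only, not 80legs code: the mode names are hypothetical labels for the options above, and the parent domain is taken naively as the last two labels of the host name, which is an assumption that fails for suffixes such as .co.uk.

```python
from urllib.parse import urlsplit


def host(url):
    """Lower-cased host name of a URL, or an empty string if there is none."""
    return (urlsplit(url).hostname or "").lower()


def parent_domain(hostname):
    # Naive assumption: the parent domain is the last two labels.
    return ".".join(hostname.split(".")[-2:])


def strip_www(hostname):
    return hostname[4:] if hostname.startswith("www.") else hostname


def in_scope(seed_url, link_url, mode):
    """Decide whether a link found while crawling from seed_url is followed."""
    seed_host, link_host = host(seed_url), host(link_url)
    if mode == "same_domain":
        return parent_domain(seed_host) == parent_domain(link_host)
    if mode == "same_fqdn":  # www.domain.com and domain.com count as the same
        return strip_www(seed_host) == strip_www(link_host)
    if mode == "same_fqdn_strict":  # www.domain.com and domain.com differ
        return seed_host == link_host
    raise ValueError("unknown mode: " + mode)
```

With the seed http://test1.msn.com, a link to http://news.msn.com/ is in scope only under `same_domain`, while the two fully-qualified modes differ only in how they treat the www prefix.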

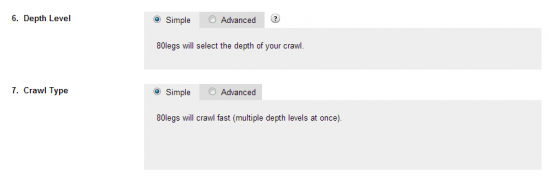

Depth & Crawl Type

When 80legs is crawling a site, it maintains a sense of which “depth level” a page is on. The URLs in your seed list will be at depth level 0. The pages linked from these URLs will be at depth level 1. The pages linked from those pages will be at depth level 2, and so on.

A page may be encountered multiple times at different depth levels while crawling. 80legs will only crawl the page the first time it is encountered and there is no guarantee that the page will be crawled at the same depth level in subsequent crawls.
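Depth levels are easiest to picture as a breadth-first walk over the link graph. The sketch below is an assumption about the general idea rather than the 80legs implementation: `links` is a hypothetical dictionary mapping each page to the pages it links to, and each page keeps the depth at which it is first encountered.

```python
from collections import deque


def depth_levels(links, seeds):
    """Assign each reachable page the depth at which it is first seen."""
    depth = {url: 0 for url in seeds}  # seed URLs sit at depth level 0
    queue = deque(seeds)
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depth:  # later encounters are ignored
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth


# Tiny hypothetical site: "/c" is linked from both "/a" and "/b".
site = {"/": ["/a", "/b"], "/a": ["/b", "/c"], "/b": ["/c"], "/c": ["/"]}
levels = depth_levels(site, ["/"])
```

In a strict breadth-first walk like this one the assigned depth is always the shortest click distance from a seed. A crawler that works through several depth levels in parallel may reach a page by a longer path first, which is why the depth of a page is not guaranteed to be the same across crawls.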

Crawl Type: Advanced

The “Fast” option crawls multiple depth levels at a time. Your crawl may finish without completely covering all pages in a depth level. This is the recommended option and will suffice in most cases.

The “Comprehensive” option crawls 2 depth levels at a time and also ensures that all pages are crawled in each depth level. This may crawl much slower than the “Fast” option above and is not recommended for general crawls.

The “Breadth-First” option may crawl very slowly. It is a true breadth-first crawl, as it waits for each depth level to complete before proceeding. There are some instances where this option may be useful, but it is not recommended for general crawls.

Additional Settings

You can limit the size of your crawl by setting this field. The crawl will stop when it hits this maximum. Live crawls currently have an upper limit based on the Pricing Plan you are subscribed to. You can increase this limit by upgrading your plan. Sandbox crawls are restricted to 100 URLs.

Max Pages Per Domain is the maximum number of pages that will be crawled for a single domain.

If you have http://msn.com and http://yahoo.com in your seed list and the value is set to 100, then a maximum of 100 pages will be crawled from http://msn.com and a maximum of 100 pages from http://yahoo.com.
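The interaction between the overall crawl limit and the per-domain limit can be sketched as follows. This is a simplified illustration with hypothetical names; it treats the host name as the domain, which is an assumption and may not match how 80legs groups pages.

```python
from collections import Counter
from urllib.parse import urlsplit


def apply_limits(urls, max_pages, max_per_domain):
    """Keep URLs in order until either limit would be exceeded."""
    per_domain = Counter()
    accepted = []
    for url in urls:
        if len(accepted) >= max_pages:
            break  # overall crawl limit reached, the crawl stops
        domain = (urlsplit(url).hostname or "").lower()
        if per_domain[domain] >= max_per_domain:
            continue  # this domain is full, other domains may continue
        per_domain[domain] += 1
        accepted.append(url)
    return accepted
```

Note the difference in behaviour: hitting the overall maximum ends the crawl, while hitting the per-domain maximum only stops further pages from that one domain.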

80legs can use MIME type headers to determine whether a page should be crawled or not. If a page is not one of the specified MIME types, then it will not be crawled. Note that HTML pages (including dynamically generated pages, such as .php, .aspx, etc.) and all other textual content fall under the “text” MIME type.
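As a rough illustration of MIME-type filtering, the sketch below checks the top-level type of a Content-Type header against a set of allowed types. The function name and the idea of passing the allowed types as a set are assumptions made for this example, not the 80legs interface.

```python
def should_crawl(content_type, allowed_types):
    """Return True if the top-level MIME type is in the allowed set."""
    # "text/html; charset=utf-8" -> "text/html" -> "text"
    media_type = content_type.split(";")[0].strip().lower()
    top_level = media_type.split("/")[0]
    return top_level in allowed_types
```

For example, `should_crawl("text/html; charset=utf-8", {"text"})` accepts an HTML page, while an `image/png` response is skipped under the same setting.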

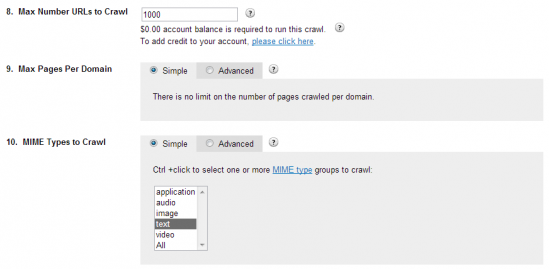

Analysis Settings

The true power of 80legs comes from being able to let you run analyses on pages while crawling. As a rule, all 80legs crawls must run some kind of analysis. These are the main types of analyses you can run:

80apps: 80apps are pre-built apps that can be used to perform a variety of different things on pages as they are crawled. Many 80apps are included free with a Pricing Plan. You may also purchase additional 80apps from the Marketplace.

Custom Code (Custom 80app): To perform a custom analysis, you can write your own Java code to perform any analysis you wish. You can also use custom code to control which pages are crawled.

Keyword Matching: This is a built-in analysis method. You supply a list of keywords to search for on your crawl and each page is matched against each keyword.

Regular Expressions: This is a built-in analysis method. You supply a list of regular expressions to match against on your crawl and each page is matched against each regular expression.
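To give a feel for what the two built-in analysis methods do, here is a minimal Python sketch of keyword and regular expression matching on the text of a single page. It is not the 80legs implementation; the function names are hypothetical, and counting case-insensitive substring occurrences is just one assumed way to define a keyword match.

```python
import re


def match_keywords(text, keywords):
    """Count case-insensitive occurrences of each keyword in the page text."""
    lowered = text.lower()
    return {kw: lowered.count(kw.lower()) for kw in keywords}


def match_patterns(text, patterns):
    """Return every match of each regular expression in the page text."""
    return {pattern: re.findall(pattern, text) for pattern in patterns}


page_text = "Gephi handles large graphs. Import the graph into Gephi."
keyword_hits = match_keywords(page_text, ["gephi", "pagerank"])
```

Running the same functions over every crawled page and storing the results per URL gives you a table similar in spirit to what a keyword or regex crawl produces.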

You can configure which pages or files are actually analysed during your crawl. For example, you may want 80legs to crawl all the pages it finds, but only analyse pages that end with “.pdf”.

The result files generated by 80legs can be quite large. If the total size of your result file is larger than this setting, then 80legs will split the result file into appropriate chunks before zipping.

This setting also affects result streaming (available on some Pricing Plans). Streamed results will be posted in chunks of this size while a crawl is running. Setting this field to a low number will allow you to retrieve your results more frequently.

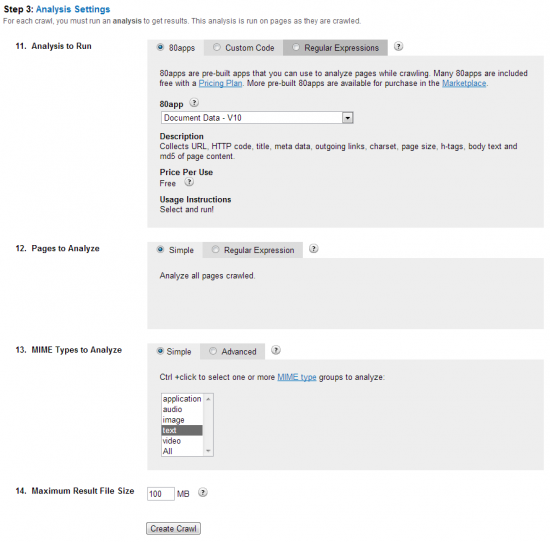

Crawl Results

Upon completion, the crawl results will be available in the “Results” tab of the crawl job. I recommend using the 80legs Java App to retrieve your results, as it gives you flexibility in the output format. Make sure you download your results before they expire, typically after 3 days for free users and a bit longer for paying customers.

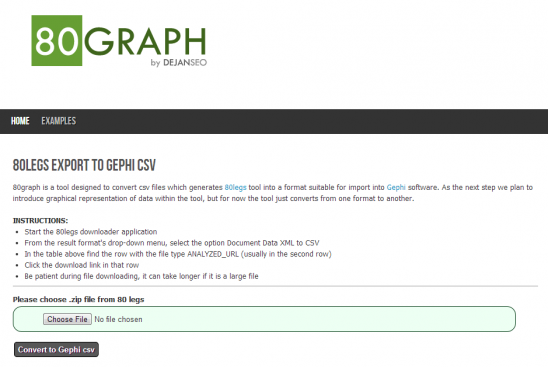

One problem with the output file is that it’s not quite suitable for import into Gephi. Not a problem if you’re a coder, but for the rest of us it might be a bit of a challenge. For this reason Dejan Labs has created a tool which converts the 80legs file into a Gephi-friendly format.

INSTRUCTIONS:

- Start the 80legs downloader application

- From the result format’s drop-down menu, select the option Document Data XML to CSV

- In the table above find the row with the file type ANALYZED_URL (usually in the second row)

- Click the download link in that row

- Be patient while the file downloads; a large file can take a while

80GRAPH

When your download completes, upload the .zip file into 80GRAPH.

The output file will be ready for import into Gephi and ready for data visualisation.
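If you would rather do the conversion yourself, the target format is simple: Gephi's spreadsheet import for an edges table expects a CSV with "Source" and "Target" columns. The sketch below is not the 80GRAPH code, and it assumes you have already parsed the 80legs results into pairs of (page URL, list of outgoing links); that input shape is a hypothetical one chosen for the example.

```python
import csv
import io


def write_edge_csv(page_links, out):
    """Write one Source,Target row per link to a file-like object."""
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow(["Source", "Target"])  # column names Gephi looks for
    for source, targets in page_links:
        for target in targets:
            writer.writerow([source, target])


# Hypothetical parsed crawl data: each page with the links found on it.
crawl = [
    ("http://a/", ["http://a/x", "http://a/y"]),
    ("http://a/x", ["http://a/"]),
]
buffer = io.StringIO()
write_edge_csv(crawl, buffer)
```

To write to disk instead, open the output file with `newline=""` and pass the file handle in place of the `StringIO` buffer.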

Gephi

What’s so amazing about Gephi is that it runs on your desktop and still manages to handle very large site structures without significant performance issues. I recommend using a machine with at least 8 GB of RAM and a fast processor for best results.

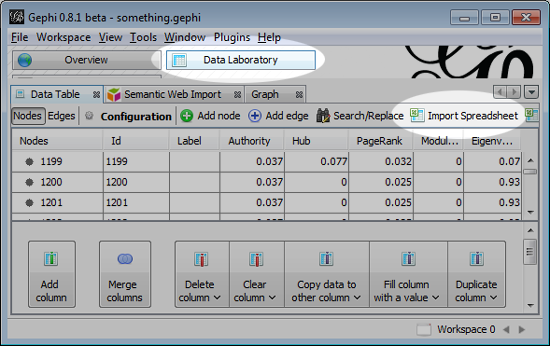

Importing Data

Click on the “Data Laboratory” tab and then go to “Import Spreadsheet”. Browse to your file and import it as “Edges table”.

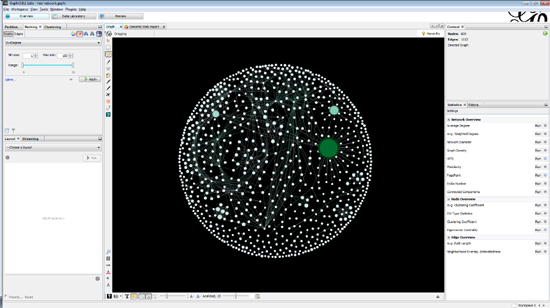

Switch back to your “Overview” tab to see the results of your import. Initially all nodes and edges will be randomly placed on the screen and ready for transformation and processing:

Experiment with different transformations until you get the desired visualisation for your website.

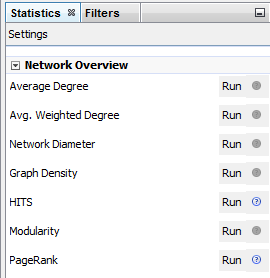

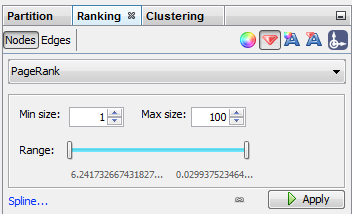

To calculate internal PageRank flow, go to the “Statistics” tab on the right and hit “Run” next to the PageRank option.

Once you do this you’ll be able to apply these values to size and colouring of your nodes as well:

Here’s an example of custom site structure visualisation, transformed and with PageRank node colouring and sizing:

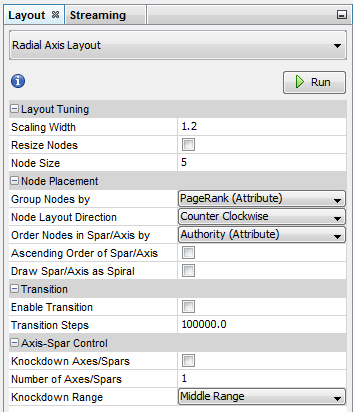

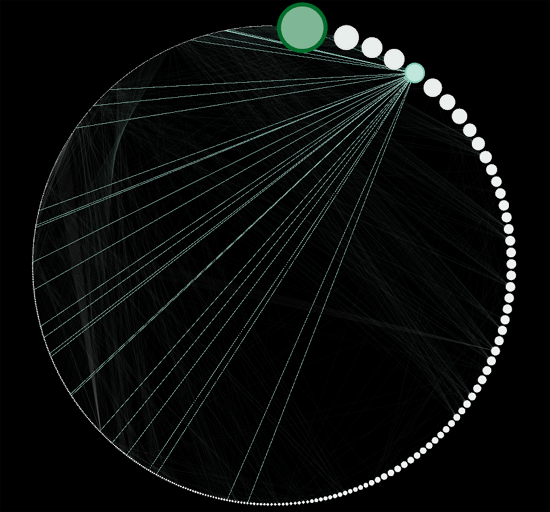

The same overview can be transformed using Radial Axis Layout which illustrates page connectivity and internal PageRank flow:

At this stage the graph is interactive which means you can browse through nodes and edges by hovering over each element and visually inspecting connections (see above). In this graph transformation I chose to sort my nodes by the amount of internal PageRank they receive.
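For intuition about what the internal PageRank values represent, here is a minimal power-iteration sketch in Python. It is an illustration of the classic PageRank idea under stated assumptions (a damping factor of 0.85, a fixed number of iterations, and rank from pages without outgoing links spread evenly), not a reproduction of Gephi's implementation, so the numbers will not match Gephi's output exactly.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Approximate PageRank for a dict mapping page -> list of linked pages."""
    pages = set(links)
    for targets in links.values():
        pages.update(targets)
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}
    for _ in range(iterations):
        # Rank held by pages with no outgoing links is shared by all pages.
        dangling = sum(rank[p] for p in pages if not links.get(p))
        new_rank = {p: (1 - damping) / n + damping * dangling / n for p in pages}
        for page, targets in links.items():
            if targets:
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
        rank = new_rank
    return rank


# Hypothetical four-page site; nothing links to "orphan".
site = {
    "home": ["about", "blog"],
    "about": ["home"],
    "blog": ["home", "about"],
    "orphan": ["home"],
}
ranks = pagerank(site)
```

In this toy site the home page collects the most rank because every other page links to it, while the page nothing links to ends up with the least. That is the same pattern you see as large and small nodes in the graph.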

The final touch to your visualisation is in the “Preview” mode. Gephi can produce high-quality vector files (PDF, SVG) or a PNG export, and it allows you to fine-tune the visual appearance of your graph and site visualisation. It may not be any more informative than the interactive mode, but it certainly does make a nice presentation for designers or your management team.

Have fun!

Dan Petrovic, the managing director of DEJAN, is Australia’s best-known name in the field of search engine optimisation. Dan is a web author, innovator and a highly regarded search industry event speaker.

ORCID iD: https://orcid.org/0000-0002-6886-3211

oh that’s sexy

Agreed!

Already testing it, but i used only 5 seed URL’s and it will take whole night for the first step to complete.

Woah! Thanks for sharing this Dan. I’ll give this a go ASAP!

Awesome, awesome post. Question though: If you’re graphing page rank, does that provide a good visualization of site structure? Or does it just rank pages by page rank and create edges based on similarity of page rank?

You’re welcome mate.

All I do with PR is for sizing of nodes and colours. In some transformations you can also sort the pages by the amount of pagerank they receive but with so many transformations to choose from I suggest a bit of experimentation before you find the optimal layout. Pro tip: you can combine two transformations, one after another to produce interesting effects.

Thanks. Been doing this for a while – we’ve been doing transformations based on TFIDF and number of links into a page, with the theory that the home page and other main nav stuff will therefore get the most prominent placement.

The problem with pagerank is that you end up with pages that link out a lot losing prominence. That may provide an inaccurate picture of site architecture, and a visualization that doesn’t reflect how the site is, but rather how we theorize Google pictures it. Given that the only pagerank formula we have to work with is pretty old, it’s not always the best way to go.

Great article though – more folks need to start using these tools!!

Thanks for the great article. I am trying to follow the instructions but 80graph doesn’t work for me. I upload the file but it doesn’t return me anything…