Who remembers audio cassettes? To find your favourite song you had to wind the tape, guessing when to stop. Today we have random access to our media, but there’s still no way to find the exact point of interest unless we annotate it manually. So after all these years, video and audio content remains inaccessible, despite Google’s lofty goal to organise the world’s information.

In this article I will make some predictions for the next five years and highlight key technical advancements which will eventually lead to searchable media content.

Basic Principle

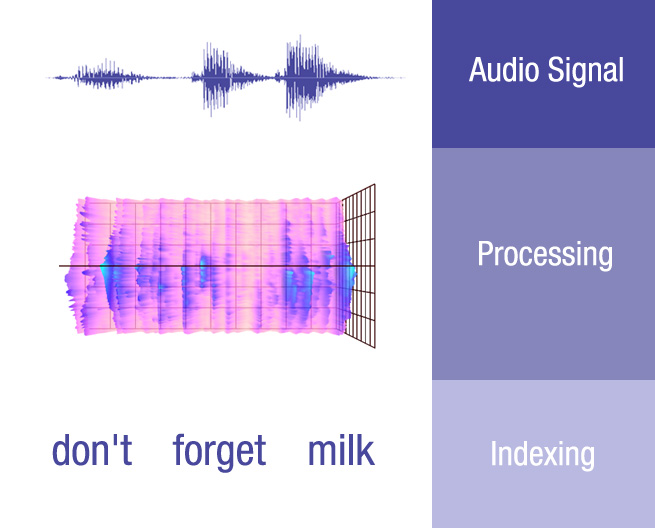

Media is heavy and inaccessible. Text is light and indexable. Google’s first mission is to use the latest in voice recognition to convert the spoken word into the written word. Once converted into text, the information contained within media can be processed using existing natural language processing and semantic methods. This would enable text- or voice-based search through our media files.
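
The basic principle (speech to text, then ordinary text indexing) can be pictured as a small inverted index over transcripts. This is a minimal sketch, assuming a speech recogniser has already produced timestamped transcript segments; the segment format, file names and function names are hypothetical, and a real system would also need ranking, stemming and fuzzy matching to cope with recognition errors.

```python
import re
from collections import defaultdict

# Hypothetical transcript segment: (media_id, start time in seconds, text),
# roughly what a speech recogniser might emit. Not a real Google format.
Segment = tuple[str, float, str]
Posting = tuple[str, float]


def tokenize(text: str) -> list[str]:
    """Lower-case the text and split it into simple word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


def build_index(segments: list[Segment]) -> dict[str, set[Posting]]:
    """Map each term to the (media_id, start) of every segment that contains it."""
    index: dict[str, set[Posting]] = defaultdict(set)
    for media_id, start, text in segments:
        for term in tokenize(text):
            index[term].add((media_id, start))
    return dict(index)


def search(index: dict[str, set[Posting]], query: str) -> list[Posting]:
    """Return the segments containing every query term: the exact spots to jump to."""
    terms = tokenize(query)
    if not terms:
        return []
    hits = set(index.get(terms[0], set()))  # copy so the index is not mutated
    for term in terms[1:]:
        hits &= index.get(term, set())
    return sorted(hits)


segments = [
    ("call.mp3", 0.0, "Hi, thanks for joining the call"),
    ("call.mp3", 42.5, "Let's move the launch date to Friday"),
    ("meeting.mp4", 310.0, "The launch budget still needs approval"),
]
index = build_index(segments)
print(search(index, "launch date"))  # [('call.mp3', 42.5)]
print(search(index, "launch"))       # [('call.mp3', 42.5), ('meeting.mp4', 310.0)]
```

Because each posting carries a start time, a match points to a position inside the file rather than just to the file, which is what makes audio and video navigable by search.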

Once text-indexed, media files can undergo semantic analysis and be linked into collections that are meaningful to us in different ways. This will eventually eliminate the need to organise our media manually, just as Gmail’s powerful search eliminated the need for email folders. Finally, Google will be able to serve better, more contextual ads in both public and personal content.

Indexing Conversations

Great for settling bets! Those of us who opt into a conversation indexing service could literally index our daily lives. A mobile phone is always with us, so it will be interesting to see how social etiquette evolves to accommodate “always on” phones. We know people didn’t take Google Glass lightly, fearing they were being recorded. How would you feel about a friend’s phone sitting there quietly, listening, recording, indexing and categorising? In Hangouts we’ve already seen an “off the record” feature. Similar mechanisms could disable voice indexing in situations where recording would be inappropriate.

Voice Calls

In the future we’ll be able to index our phone calls with the approval of our contacts. This would let us search for specific terms or people and give us insights into, and statistics about, our communication style. Imagine looking at Voice Analytics with a breakdown of contacts, conversation topics, key terms, durations and much more. Eventually this type of data will find its way into our personal assistant AI, which will be able to remind us, make suggestions and recommendations, or advise us in certain situations.

Video Calls

Video calls add another layer of complexity. Some are just casual catch-ups, while others we wish to have recorded. Automatic annotation and transcription of our video conversations will let us query by voice or text and get the exact video call, at the exact spot where our search terms occur.

Team Meetings

Who’s taking notes? Google is, and it will do so faster and more effectively than any human can. Not only will speech recognition algorithms be able to distinguish between different speakers, they will also handle interruptions and participants talking at the same time. Speech recognition alone won’t deserve all the credit for this technology; it will depend on smooth integration with everything else Google, including Google+, Hangouts, Calendar, Gmail and YouTube. For example, a list of meeting participants could be derived from the calendar entry, and their identities, professional roles and preferences ascertained from their Google+ profiles. When required, we’ll be able to make formal remarks to go on the record with our virtual note taker. The recorded information will be processed, giving us insights into action items, assignable tasks, hot topics, recurring themes and perhaps other interesting analytics, including speaker dominance and team harmony.
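
Metrics such as speaker dominance become simple arithmetic once speech has been attributed to individual speakers (diarisation). As a minimal sketch, assuming hypothetical diarised segments and defining “dominance” simply as each participant’s share of total talk time (one possible measure, not a Google definition):

```python
from collections import defaultdict

# Hypothetical diarised transcript: (speaker, start_s, end_s) per segment.
# Overlapping speech appears as overlapping segments and is credited to each speaker.
Turn = tuple[str, float, float]


def talk_time_shares(turns: list[Turn]) -> dict[str, float]:
    """Return each speaker's fraction of the summed speaking time."""
    totals: dict[str, float] = defaultdict(float)
    for speaker, start, end in turns:
        if end < start:
            raise ValueError(f"segment for {speaker} ends before it starts")
        totals[speaker] += end - start
    grand_total = sum(totals.values())
    if grand_total == 0:
        return {}
    return {speaker: t / grand_total for speaker, t in totals.items()}


turns = [
    ("Alice", 0.0, 30.0),
    ("Bob", 30.0, 40.0),
    ("Alice", 40.0, 70.0),
    ("Carol", 65.0, 75.0),  # Carol talks over Alice for five seconds
]
print(talk_time_shares(turns))  # {'Alice': 0.75, 'Bob': 0.125, 'Carol': 0.125}
```

Crediting overlapping speech to every speaker keeps the calculation simple; a different choice (for example, discounting interruptions) would change the shares.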

Personal Memos

Entering personal memos will be quite conversational. Google will not only accept voice input but will also ask clarifying questions and assign items more freely. It may start editing our calendar entries and to-do lists, and even attempt to arrange our day, week or month, warning us of overlaps or missed deadlines. Personal memos will give Google deep insight into us as individuals, letting it recommend things we may enjoy or find helpful.

Collaborative Environment

Daily office buzz. Who knows what valuable information may be exchanged during a collaborative session? These informal meetings often go undocumented. What if you forgot an idea or a detail and wished to recall it? No problem. A voice or text search will bring up the exact point and context in which it was raised.

Brainstorming Sessions

Do you think Google will simply write down what you dictate and index it for later search? No way. It will look things up while you ponder the next big solution and return useful facts and information that may help you make decisions and see things more clearly. This is the point at which Google starts taking a more active role in engaging with its users.

How Plausible Is Conversation Indexing?

In the last five years we’ve seen encouraging advancements in natural language processing, thanks to the combined efforts of Google’s research and engineering teams. Voice search in particular has reached the point where it’s truly usable, and that is no small achievement.

While pure voice recognition has made no exciting leaps, added personalisation factors, including device location, search context and history, combine to make a usable system. “OK Google” works surprisingly well, even in noisy environments and with varied accents and vague or ambiguous input.

One of the quirkier features is visible query rewriting, as Google’s speech recognition reconciles what it hears with the contextual data available. In other words, it “makes up its mind” about the best-match query for that user at that particular moment:

“best love…” -> “best logo quiz” -> “best local cafes”
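
A toy way to picture this rewriting is to combine each candidate transcript’s acoustic score with a prior derived from the user’s context and pick the highest total. This is an illustrative sketch of the general idea only, not Google’s actual method; the candidates, scores, prior and the `weight` parameter are all invented.

```python
import math

# Invented numbers: (candidate transcript, acoustic log-probability).
hypotheses = [("best logo quiz", -2.0), ("best local cafes", -2.3)]

# Hypothetical contextual prior, e.g. derived from location, time of day and history.
context_prior = {"best local cafes": 0.6, "best logo quiz": 0.05}


def rescore(hyps, prior, weight=1.0, floor=1e-6):
    """Pick the text maximising acoustic log-prob + weight * log(contextual prior)."""
    def score(h):
        text, acoustic_logp = h
        return acoustic_logp + weight * math.log(prior.get(text, floor))
    return max(hyps, key=score)[0]


print(rescore(hypotheses, context_prior, weight=0.0))  # acoustics only: best logo quiz
print(rescore(hypotheses, context_prior))              # with context: best local cafes
```

In this model, the acoustically stronger guess loses once context is weighed in; as more audio arrives and the candidates change, the winner can flip again, which would produce the kind of visible rewriting shown above.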

Google can now transcribe the spoken word with improved accuracy thanks to a variety of contextual and personalisation factors.

What if we could index and search our conversations?

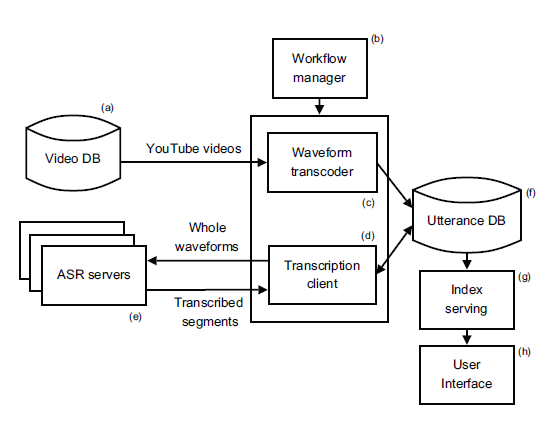

Google has already demonstrated its ability to transcribe, index and query audio via a user-friendly interface:

“We developed a scalable system that transcribes this material and makes the content searchable (by indexing the meta-data and transcripts of the videos) and allows the user to navigate through the video material based on content.”

Glimpses of this technology are available in various forms, including automated transcription on YouTube, but we’re still unable to search through video and audio files by text the way we do with PDF documents today.

This may be because error rates are still above the threshold required for “Gaudi” (Google Audio Indexing) to leave the Speech Research Group at Google Labs. With 115 publications, 8 of which have been (or are to be) published this year, we can safely say the Speech Technology Research team has been busy.
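
The usual yardstick for speech recognition error is word error rate (WER): the minimum number of word substitutions, deletions and insertions needed to turn the recogniser’s output into a reference transcript, divided by the number of words in the reference. The article does not say which WER, if any, would be the bar for release, and the sketch below assumes none; it only shows how the metric is computed, using a standard word-level edit distance and an invented example.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """(substitutions + deletions + insertions) / number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    # prev is the previous row of the edit-distance table: prev[j] is the distance
    # between the reference words processed so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(
                prev[j] + 1,         # deletion: reference word missing from output
                curr[j - 1] + 1,     # insertion: extra word in output
                prev[j - 1] + cost,  # substitution (or a match when cost is 0)
            )
        prev = curr
    return prev[-1] / len(ref)


# One substitution (local -> logo) and one deletion ("me") over 5 reference words.
print(word_error_rate("best local cafes near me", "best logo cafes near"))  # 0.4
```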

The group focuses on two things:

1. Making speaking to our phones seamless and ubiquitous.

2. Making video content searchable.

Prior research hints at numerous challenges in tracking phone conversations, including speaker detection, overlapping speakers, and noise detection and filtering. Google already possesses a large corpus of data from YouTube and Hangouts. The missing link is the cellular network. How exciting would it be if Google became a wireless carrier… oh wait…

This was part 2 of the “Conversations With Google” series.

Dan Petrovic, the managing director of DEJAN, is Australia’s best-known name in the field of search engine optimisation. Dan is a web author, innovator and a highly regarded search industry event speaker.

ORCID iD: https://orcid.org/0000-0002-6886-3211