This article explains how to crawl a single domain and generate a complete list of all external anchor text used.

80legs

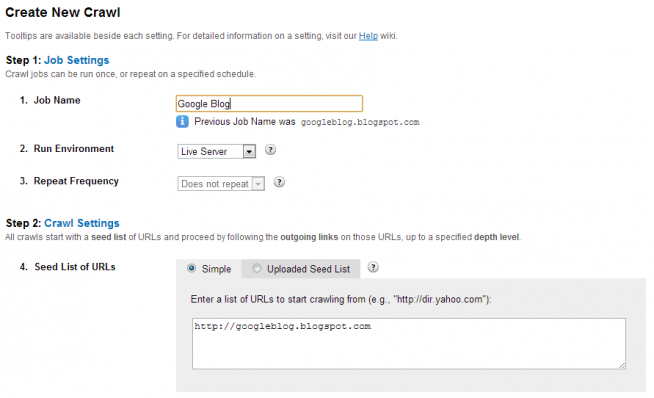

- Log in to 80legs and create a new job. You can name it whatever you like.

- Enter the URL of the domain you wish to crawl in the “Seed List of URLs” field, using “Simple” mode.

- Configure the “Outgoing Links to Crawl” setting (typically the third option).

- Paying customers can change the maximum number of URLs to crawl; otherwise, leave it at 1,000.

- Don’t touch anything else. Hit “Create Crawl” and wait for the crawler to finish the job.
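80legs runs the crawl on its own servers, but conceptually the job configured above is a breadth-first crawl from one seed URL, capped at a maximum number of pages. The following Python sketch illustrates that idea offline; it is not how 80legs is implemented. It assumes the crawl should stay on the seed's host (our reading of a single-domain crawl), and `crawl_domain` and `fetch_links` are hypothetical names: `fetch_links` stands in for whatever HTTP client and HTML parser you would use to return a page's raw `href` values.

```python
from collections import deque
from typing import Callable, Iterable, List
from urllib.parse import urldefrag, urljoin, urlparse


def crawl_domain(
    seed: str,
    fetch_links: Callable[[str], Iterable[str]],  # hypothetical: url -> raw hrefs on that page
    max_urls: int = 1000,
) -> List[str]:
    """Breadth-first crawl that never leaves the seed's host.

    Returns the crawled URLs in visiting order, stopping after max_urls pages.
    """
    host = urlparse(seed).netloc.lower()
    queue = deque([seed])
    seen = {seed}
    crawled: List[str] = []
    while queue and len(crawled) < max_urls:
        url = queue.popleft()
        crawled.append(url)
        for href in fetch_links(url):
            # Resolve relative links and strip #fragments so duplicates collapse.
            absolute, _fragment = urldefrag(urljoin(url, href))
            if urlparse(absolute).netloc.lower() != host:
                continue  # external link: not followed (anchor extraction is a separate step)
            if absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return crawled
```

Because `fetch_links` is injected, you can exercise the crawl with a dictionary of fake pages before pointing it at a real site; the `max_urls=1000` default mirrors the limit mentioned above.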

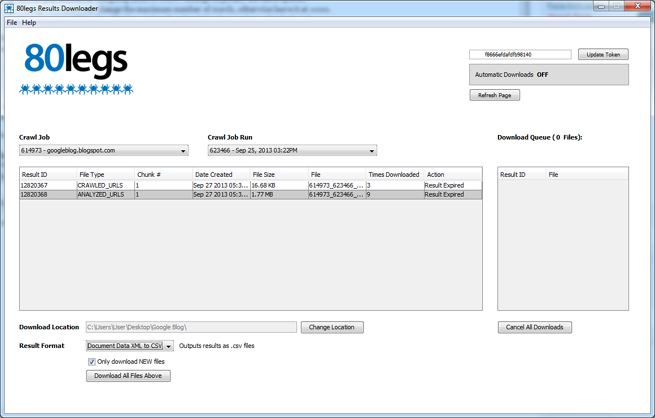

The time to completion depends on how busy the server farm is, but in most cases it takes about 24 hours. Once the job is complete, you can download your results using the Java app. You will need to activate the app with your personal security token, which is shown on any job results page.

- Launch the Java downloader app and select the Document Data “XML to CSV” option in the results drop-down.

- Select the local directory you wish to download to.

- Start the download by clicking the “ANALYZED_URL” row in the list of available download files.

Note that some post-download processing may take place, so be patient until the whole process has completed. Once it finishes, all temporary files will disappear from the download directory.

Now you have a lot of data you can do wonderful things with, including external link anchor text analysis, which is what we’ll focus on in this article.

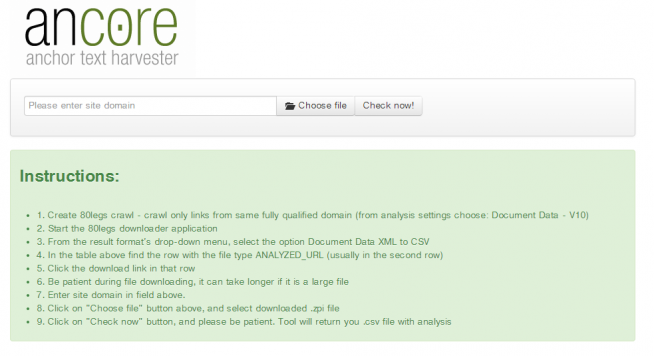

Ancore

Ancore is a simple utility we built that converts the 80legs output file into a usable CSV with the following columns:

- Linking URL

- Linked URL

- Anchor Text

- Canonical

- Nofollow

Processing your file in Ancore is simple: enter the crawled domain URL, choose the 80legs export .zip, and hit the “Check now!” button.
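Ancore's internals aren't described here, so the following is only a rough Python sketch of the same kind of transformation for a single crawled page, using the standard library's `html.parser`. The names `AnchorCollector`, `external_links` and `rows_to_csv` are hypothetical. We assume "external" means any host outside the crawled domain and its subdomains, and that the Canonical column holds the linking page's `rel="canonical"` URL; Ancore may define both differently.

```python
import csv
import io
from html.parser import HTMLParser
from typing import Optional
from urllib.parse import urljoin, urlparse

# Column names taken from the Ancore output described above.
FIELDS = ["Linking URL", "Linked URL", "Anchor Text", "Canonical", "Nofollow"]


class AnchorCollector(HTMLParser):
    """Collects <a href> links (href, visible text, nofollow) and the page canonical."""

    def __init__(self) -> None:
        super().__init__()
        self.canonical = ""
        self.links: list = []  # (href, anchor text, nofollow) tuples
        self._href: Optional[str] = None
        self._rel: list = []
        self._text: list = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        rel = (attrs.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel:
            self.canonical = attrs.get("href") or ""
        elif tag == "a" and attrs.get("href"):
            self._href, self._rel, self._text = attrs["href"], rel, []

    def handle_data(self, data):
        if self._href is not None:  # only keep text inside an open <a>
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            text = " ".join("".join(self._text).split())  # collapse whitespace
            self.links.append((self._href, text, "nofollow" in self._rel))
            self._href = None


def external_links(page_url: str, html: str, domain_url: str) -> list:
    """Return Ancore-style rows for links on page_url that leave the crawled domain."""
    parser = AnchorCollector()
    parser.feed(html)
    parser.close()
    # Accept "https://www.example.com" or a bare "example.com"; ignore a leading "www.".
    host = urlparse(domain_url if "//" in domain_url else "//" + domain_url).hostname or ""
    site = host[4:] if host.startswith("www.") else host
    canonical = urljoin(page_url, parser.canonical) if parser.canonical else ""
    rows = []
    for href, text, nofollow in parser.links:
        target = urljoin(page_url, href)
        parts = urlparse(target)
        if parts.scheme not in ("http", "https"):
            continue  # skip mailto:, javascript:, tel: and similar
        link_host = parts.hostname or ""
        if link_host == site or link_host.endswith("." + site):
            continue  # internal link (same domain or a subdomain)
        rows.append({"Linking URL": page_url, "Linked URL": target,
                     "Anchor Text": text, "Canonical": canonical, "Nofollow": nofollow})
    return rows


def rows_to_csv(rows) -> str:
    """Serialise rows to CSV text in the Ancore column order."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()
```

Running `external_links` over every crawled page and concatenating the rows gives a file shaped like the one described above. Treating subdomains as internal is a design choice; drop the `endswith` check if you want links to, say, a blog subdomain counted as external.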

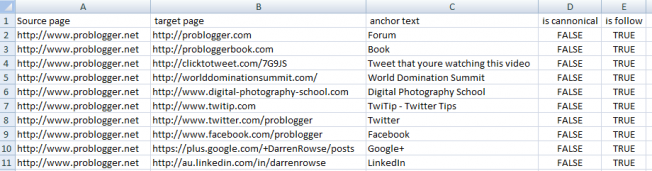

The download will start shortly after that, and the output file will contain one row per external link, with the columns listed above.

Reader’s Challenge

We’re giving you access to the raw anchor text data from Google’s very own blog. Download it and let us know what you can tell about their linking style. Share your observations: how do they link, and why do they link?

My initial observation is that Google uses rel="nofollow" on only one article, and that article is about nofollow usage.
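To get started on the challenge, a few lines of Python can summarise an Ancore-style CSV: which external hosts receive the most links, which anchor texts repeat, and which articles carry nofollow links (a quick way to check the observation above). This is a sketch, assuming the CSV header uses the five column names listed earlier; `summarize` is a hypothetical helper, and the set of values treated as "nofollow" is a guess, because the exact encoding of the Nofollow column isn't stated here.

```python
import csv
from collections import Counter
from urllib.parse import urlparse

# Assumption: the export's spelling of the Nofollow flag is unknown,
# so treat any of these (case-insensitive) as "nofollow".
TRUTHY = {"true", "yes", "1", "nofollow"}


def summarize(csv_lines):
    """Tally linked hosts and anchor texts, and collect pages that use nofollow.

    csv_lines is any iterable of CSV lines (an open file works) whose header
    uses the Ancore column names.
    """
    hosts, anchors = Counter(), Counter()
    nofollow_pages = set()
    for row in csv.DictReader(csv_lines):
        hosts[urlparse(row["Linked URL"]).hostname or ""] += 1
        anchors[row["Anchor Text"].strip().lower()] += 1
        if row["Nofollow"].strip().lower() in TRUTHY:
            nofollow_pages.add(row["Linking URL"])
    return hosts, anchors, nofollow_pages
```

Calling `hosts.most_common(10)` on the result lists the ten most-linked external hosts, and a `nofollow_pages` set with a single entry would be consistent with the observation above.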


Dan Petrovic, the managing director of DEJAN, is Australia’s best-known name in the field of search engine optimisation. Dan is a web author, innovator and a highly regarded search industry event speaker.

ORCID iD: https://orcid.org/0000-0002-6886-3211

Hi Dan,

Thanks for sharing! Would you recommend 80legs over Screaming Frog for this very specific purpose? As far as I know, Screaming Frog might have a slight advantage in terms of speed…

Hey Dan,

Nice little crawler, but I’m still a big fan of Scrapebox and Screaming Frog :P Automator files and learning mode mean I can scrape pretty much anything from my Screaming Frog crawl ^.^

But on to my main question: how did you embed a CSV from G Drive? I still haven’t figured out how to do it 🙁

80legs is not comparable to Screaming Frog… it’s really an industrial-level crawler. Once you’re out of the queue and the job starts it can crawl millions of URLs – and fast.

There’s an export option from Google Apps. File -> Publish to the web.

Thanks for the feedback – it’s what I suspected (or hoped) you’d answer. Screaming Frog does a great job for small to medium-sized sites, but if you want to crawl a site with more than 100k URLs it’s not really an option.

Thanks Dan! 🙂

No major revelations from the data: the linking style mainly uses brand or natural anchors. It is surprising, though, how little they link out to external (non-Google) properties.

Hi Dan!

Great post. You explained very clearly how to crawl a single domain and generate a complete list of all external anchor text used. I would recommend 80legs 🙂 If we want to crawl thousands of URLs, then I believe 80legs is what we need.