In our two recent experiments we created a number of pages copied from other domains. Both cases got a lot of attention, but it seems they also got the attention of Google’s search quality algorithm.

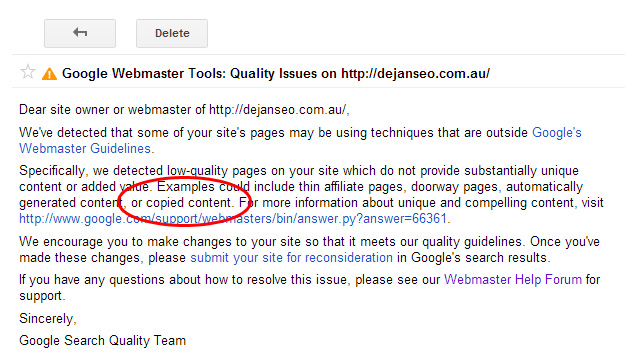

Today we’ve received the following message:

This is good news for all webmasters out there and a sign that Google does care about us and our content.

Further to this, Rob Mass, the original experiment participant, has noticed that our page no longer shows for some searches as Google’s algorithm attempts to resolve content and page ownership.

There is still no comment from Google, but I’m satisfied with the outcome so far and will take our experiments down to resolve the quality issue that the recently added copied content created on our website.

Update: All test pages have been stripped down and replaced with the experiment end notification.

- http://rand.dejanmarketing.com/

- http://rob.dejanmarketing.com/ReferentieEN.htm

- http://shopsafe.dejanmarketing.com/

- http://dsq.dejanmarketing.com/

End Message

What Google is telling us here is that if you’re a spammer, sooner or later, you will be caught.

Dan Petrovic, the managing director of DEJAN, is Australia’s best-known name in the field of search engine optimisation. Dan is a web author, innovator and a highly regarded search industry event speaker.

ORCID iD: https://orcid.org/0000-0002-6886-3211

Spammers can just rinse and repeat.

This is a small comfort in a way: at least it shows that, whilst the algo treats PR (PageRank) as the strongest authority signal, Google has clearly been able to establish who the scraper is… eventually.

For my money, though, this took far too long. As Alan is getting at, this time window still makes scraping worthwhile for a spammer who is burning and churning websites.

So would you serve a 410 error to get those experiment pages out of the index quickly? Also, why does the main domain get the notification if it is the subdomain that is scraping the content?

Because these URLs can also be reached as a subfolder.

Well… let’s hope Google gets better with time. They seem very busy catching spam.

No matter what Google does, spammers will keep finding ways around it. I think their job is to patch up the issues that affect most users. Small, isolated instances of ‘creative’ SEO may not get as much attention.

Dan, you made some noise with your previous article about copy-paste content on the web (WebProNews and others covered it), and then Google manually sent a warning message to your Webmaster Tools… I guess that’s the whole story.

I wonder how many webmasters have received the “Quality Issues” notification recently. If you have lazy copywriters, it seems useful to know there’s a detectable low-quality flag.

I thought the same, and this is what really worries me. If they are genuinely paying attention, that’s fine. But I have the feeling that they sent the message and took action because of the articles that had recently appeared about Dan’s experiment. What do you think? Perhaps we should try not making any noise and see what happens.

Good experiment, Dejan. Nice to see some useful info coming out of it.

I would assume this happened because the pages were exact copies. Most people just spin the content, in which case this warning would most likely not appear 🙁

BTW, did it just result in a warning, or did you notice any impact on the duplicate pages’ SERP performance?

The problem is that when G brings out a measure to combat spam, spammers find the “next best thing” to get around Google. Yes, it has become harder for them, but they are still around!

Sure, Ani. I think the experiment needs to be done quietly, then wait some time (maybe 2-3 months, or until the next Panda/Penguin update) before drawing serious, fundamental conclusions. I ran some tests and can say that old articles tied to a specific time or period (for example, “Transformers 2 Logo”), once they take a position within at most 6 months, hold it for a long time with only minor changes (+2, -1, etc.). Those are page 1 results, of course!

I think that Dejan has the resources to do it well.

Great experiments, Rob. I must have missed this in the articles: would this have worked if the domains were actually subdirectories rather than subdomains?

Google Take Action

Be Aware!

Don’t post your country’s secrets, government policies, programs and ratios; otherwise other developed or underdeveloped countries may take advantage of them.

The governments of developed countries such as the USA and UK may take advantage of your comment and your country’s government policies…


They probably sent that message because they read your blog, tbf.