When Google crawls the web, it tries to be smart about how it manages and prioritises its resources. A variety of clues and signals help it decide which documents to crawl and index, and how deep to go down a given path. The idea is to get the most value out of the available crawling capacity. Some website structures loop or generate infinite URL spaces, while others have many unique URLs that are of very little value to users. Google has published a variety of documents to help webmasters understand its crawling and indexing process.

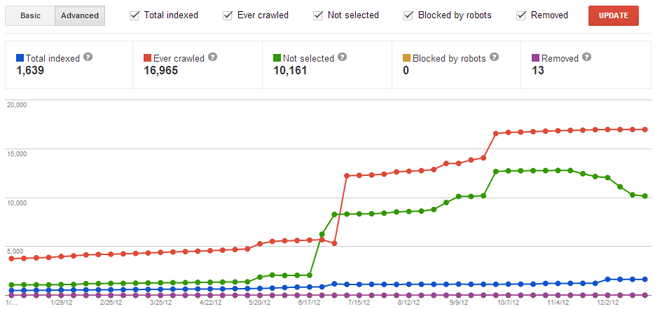

We also have Google Webmaster Tools, and in the “Health > Index Status” section there’s an interactive diagram showing how many pages are in the index, how many documents/URLs were crawled in total, how many were removed or blocked and, finally, how many were “not selected”.

The “not selected” URLs are basically those that have, for one reason or another, been ignored by Google.

What a lot of webmasters don’t realise is that Google does the same thing with the link graph. The original PageRank formula works on a relatively simple principle which, at the time, was thought to be spam-proof. Over time, people realised the value of links and understood the impact anchor text and PageRank had on their rankings. So for years now Google has been refining its core algorithm in order to keep the results as free from manipulation as possible.

As a result, Google will look at all your links and, so to speak, “categorise” them into “selected” and “ignored”. Selected links are a valid part of the link graph and affect a website’s ability to rank, while ignored links are largely well-known “fluff” such as domain information websites, parked domains and certain other types of websites whose links are of no particular use to Google’s ranking algorithm.
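To make the “selected vs ignored” idea concrete, here is a minimal sketch of the simplified PageRank power iteration as originally published, with one hypothetical assumption: an ignored link is simply dropped from the graph before the calculation runs. Google’s current algorithm is not public, so the page names, the `drop_ignored` helper and the damping value below are illustrative only.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Simplified PageRank via power iteration.

    links: dict mapping each page to the list of pages it links to.
    """
    pages = set(links)
    for targets in links.values():
        pages.update(targets)
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        # Every page gets the "random jump" share first.
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # Split this page's rank evenly across its outgoing links.
                share = damping * rank[page] / len(targets)
                for t in targets:
                    new_rank[t] += share
            else:
                # Dangling page (no counted links): spread its rank evenly.
                for t in pages:
                    new_rank[t] += damping * rank[page] / n
        rank = new_rank
    return rank


def drop_ignored(links, ignored):
    """Hypothetical filter: remove every (source, target) link marked as ignored."""
    return {src: [t for t in targets if (src, t) not in ignored]
            for src, targets in links.items()}


# Illustrative graph: two parked domains link to "mysite".
links = {
    "home": ["about", "mysite"],
    "about": ["home"],
    "mysite": ["home"],
    "parked1": ["mysite"],
    "parked2": ["mysite"],
}
ignored = {("parked1", "mysite"), ("parked2", "mysite")}

full = pagerank(links)
filtered = pagerank(drop_ignored(links, ignored))
print(round(full["mysite"], 3), round(filtered["mysite"], 3))
```

In this toy graph the score of `mysite` drops once the parked-domain links are ignored, even though those links still “exist” on the web. That is the practical point: an ignored link is neither a penalty nor a benefit, it just doesn’t count.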

Interestingly, a subset of both groups are also “manipulative” (also known as “inorganic” or “unnatural”) links. From our observations so far, it appears that websites with a certain amount of inorganic links go through a graduated treatment. For example, a few detected unnatural links pointing to your website may simply be moved into the equivalent of the ignored links, but a record of them is kept. A more pronounced presence of manipulative links over time may lead to an unnatural link warning, in which Google advises that it has ignored certain links but still trusts your website as a whole.

At a certain point a page may start to see a negative impact due to the presence of inorganic links, in particular when Google is unsure whether it has managed to catch and ignore every unnatural link. Let’s call this a “just in case” scenario.

There are, of course, stronger actions Google’s algorithm (and the webspam team) can apply to a page or a website, but the purpose of this article is to point out that some of your links may already be ignored.

This is good to know, especially if you’re still investing time and money in links on an ongoing basis, unaware that the links you’re securing (or maintaining) are not a factor in your website’s success in search results.

Here are some common link schemes with obvious footprints:

- Paid links

- Your own websites

- Link exchange programmes

- Low quality guest posts

- Distributed articles

- Low quality directories

- Fake user profiles

- Social network or bookmark spam

How can you be sure?

Run a test. If you know you’ve got some dodgy links, take them down and monitor the impact on your rankings. If there is none, it’s likely they were being ignored all along. So if you’ve been paying for those links, go ahead and redirect the funds towards improving user experience and producing useful, engaging content for your site. Remember, if there are links you’re unable to take down even after trying, the link disavow tool is always at your disposal.
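If you do end up using the disavow tool, the file it accepts is plain text: one URL or one `domain:` entry per line, with lines starting with `#` treated as comments. The sketch below assembles such a file from lists you have already compiled (for example from a Webmaster Tools link export). The helper name and the example domains and URLs are hypothetical placeholders, not real offenders.

```python
def build_disavow_file(domains, urls, note=None):
    """Assemble disavow-file text: optional '#' comment, domain: lines, then URLs."""
    lines = []
    if note:
        lines.append(f"# {note}")
    # "domain:" disavows every link from that host, not just one page.
    lines += [f"domain:{d}" for d in sorted(set(domains))]
    # Individual URLs are listed as-is, one per line.
    lines += sorted(set(urls))
    return "\n".join(lines) + "\n"


text = build_disavow_file(
    domains=["spammy-directory.example", "parked-site.example"],
    urls=["http://blog.example/low-quality-guest-post.html"],
    note="Owners contacted, links could not be removed",
)
print(text, end="")
```

Disavowing by `domain:` is usually the safer choice for low-quality directories and parked domains, since their links tend to appear on many pages of the same host.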

Link Clean-up Tip

Ignored links, which affect your website in neither a positive nor a negative way, are not always included in the links section of Google Webmaster Tools.

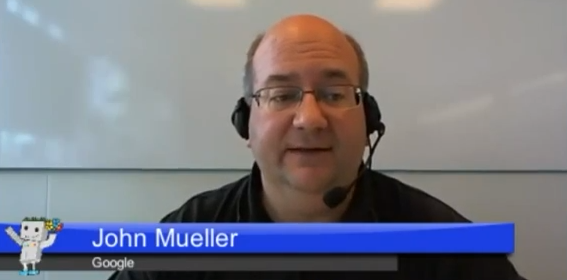

According to John Mueller from Google, “selected” links (including those that may be having a negative impact) are likely to be included. This means you can rely on Google Webmaster Tools to hunt down any bad links you may have. John also advises that other link analysis tools may be helpful too, in particular because they let you sort, manipulate and export link information with more detail and control.

Dan Petrovic, the managing director of DEJAN, is Australia’s best-known name in the field of search engine optimisation. Dan is a web author, innovator and a highly regarded search industry event speaker.

ORCID iD: https://orcid.org/0000-0002-6886-3211

Though the only really new things there are the GWT link data and the disavow method (good tip!), it’s a solid post – good job 😀

I think a little more clarity may help.

In the list of common/obvious items – not all distributed articles are ignored.

Press Releases are a fine example of some that may count.

As per usual – it seems to be dependent on quality and volume.

(I honestly doubt G will count all 200 links from 200 reproductions – but I don’t think they discount all 200 either … the evidence suggests that at least some of the more prominent sites get used)

Link exchanges are another questionable one.

How do you tell the difference between an exchange and reciprocation based on respect/appreciation?

Aside from volume, location/destination and timing … all of which can be fiddled with … the only other indicator I can think of is seeing a similar pattern repeat itself.

So the key there is to only do it a little, infrequently, with a random delay, and to monitor the pages/link text etc.

And then we have mutual sites.

I call Google out on this one – as I’ve seen sites benefit from links from their own sites … and I happen to know that G may well have a threshold of sorts for certain patterns/limits.

I wonder how many SMBs know how to find out if they have “bad links” and what to do about them?

If you don’t partake in common link schemes with common, obvious footprints:

Paid links

Your own websites

Link exchange programmes

Low quality guest posts

Distributed articles

Low quality directories

Fake user profiles

Social network or bookmark spam

…. then you’re probably OK

according to SearchMetrics ♦ 5 of the Top 6 ranking factors are “social”

social signals + social shares are becoming way more influential in ranking a web page

those who create + curate + syndicate killer content that gets liked + shared + plus 1’d and is connected to your G+ rel=author credentials

the more social signals coming from your Author Rank creds ♦ the more your AR will improve and impress Google

I like how Rachel defined the currency of the new economy in her awesome TED Talk

http://www.ted.com/talks/rachel_botsman_the_currency_of_the_new_economy_is_trust.html

The currency of the new economy is trust