Knowledge Vault is a probabilistic knowledge base developed by Google.

Built on machine learning, Knowledge Vault not only extracts data from multiple sources (text, tabular data, page structure, human annotations) but also infers facts and relationships from all the data available. The web contains massive amounts of false data, so the framework relies on existing knowledge bases (e.g. Freebase) to validate facts during one of the steps of its assessment process. The researchers describe the process as “link prediction in a graph” and approach it with two different methods: a) a path ranking algorithm (PRA) and b) a neural network model (MLP) [1].
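To give a feel for what “link prediction in a graph” means, here is a minimal sketch of the intuition behind path-based methods: if two entities are already connected by a chain of known relations, that chain is evidence for a new, direct link between them. This is not the PRA algorithm from the paper, only the path-feature idea; the toy facts and the names `EDGES`, `build_index` and `relation_paths` are made up for illustration.

```python
from collections import defaultdict

# Toy knowledge graph of (subject, predicate, object) facts.
# These facts are invented for the example.
EDGES = [
    ("alice", "married_to", "bob"),
    ("alice", "parent_of", "carol"),
    ("bob", "lives_in", "sydney"),
]


def build_index(edges):
    """Map each subject to its outgoing (predicate, object) pairs."""
    index = defaultdict(list)
    for subject, predicate, obj in edges:
        index[subject].append((predicate, obj))
    return index


def relation_paths(index, source, target, max_len=2):
    """Return every chain of predicates (up to max_len hops) from source to target."""
    found = []
    stack = [(source, ())]
    while stack:
        node, path = stack.pop()
        if node == target and path:
            found.append(path)
        if len(path) < max_len:
            for predicate, nxt in index.get(node, []):
                stack.append((nxt, path + (predicate,)))
    return sorted(found)


index = build_index(EDGES)
paths = relation_paths(index, "alice", "sydney")
```

In this toy graph the only chain from `alice` to `sydney` is `married_to` followed by `lives_in`. A link predictor could treat chains like that as features when scoring a candidate fact such as (alice, lives_in, sydney).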

Anatomy of Knowledge Vault

Main components:

- Extractors – extract triples (subject, predicate, object) from large data sets and assign confidence scores.

- Graph-based priors – learn from existing knowledge bases.

- Knowledge fusion – computes the final score for the probability that a fact is true.

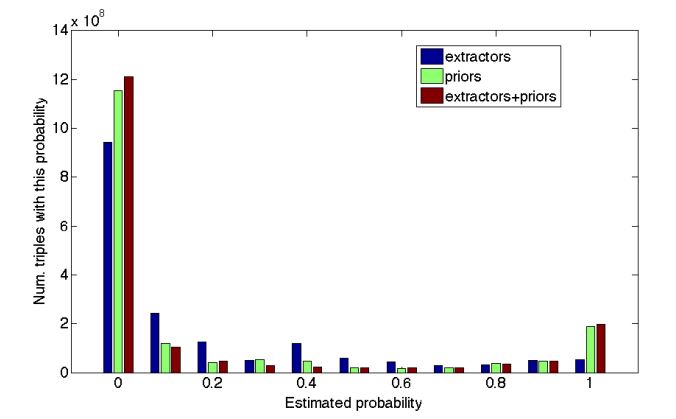
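The three components above can be pictured as a small pipeline: an extractor proposes a triple with a confidence score, a prior says how plausible that triple is given existing knowledge, and a fusion step combines the two into one probability. The sketch below is only an illustration under stated assumptions. The paper describes fusion as supervised machine learning, whereas the fixed weights, the `Triple` class and the `fuse` function here are hypothetical stand-ins.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Triple:
    """A candidate fact in (subject, predicate, object) form."""
    subject: str
    predicate: str
    obj: str


def logit(p: float) -> float:
    """Convert a probability to log-odds, clamped away from 0 and 1."""
    p = min(max(p, 1e-6), 1 - 1e-6)
    return math.log(p / (1 - p))


def sigmoid(x: float) -> float:
    """Convert log-odds back to a probability."""
    return 1 / (1 + math.exp(-x))


def fuse(extractor_conf: float, prior: float,
         w_ext: float = 1.0, w_prior: float = 1.0, bias: float = 0.0) -> float:
    """Combine extractor confidence and prior into one probability.

    Assumption: a hand-weighted logistic combination stands in for the
    learned fusion model; the weights are made up, not taken from the paper.
    """
    return sigmoid(w_ext * logit(extractor_conf) + w_prior * logit(prior) + bias)


# Made-up example values, for illustration only.
candidate = Triple("Barack Obama", "born_in", "Honolulu")
agreeing = fuse(extractor_conf=0.8, prior=0.8)     # both sources support the fact
conflicting = fuse(extractor_conf=0.8, prior=0.2)  # sources disagree
```

With equal weights, two sources that agree push the fused score above either input, while two sources that disagree by the same margin cancel out to an even chance.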

By combining existing knowledge bases with data from their own extraction process, the researchers have managed to reduce the uncertainty about facts, as illustrated in the graph below:

Is Knowledge Vault Replacing Knowledge Graph?

Apples and pears, I guess. The key advantage of Knowledge Vault over the current Knowledge Graph is the way its design allows it to scale. So far, KV has inferred some 271,000,000 “confident facts”, which are considered true with at least 90% probability. To distill these facts, KV extracted 1,600,000,000 triples covering 4,469 relation types and 1,100 kinds of entities.
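The 90% cutoff can be pictured as a simple filter over scored triples. Here is a minimal sketch, assuming scored facts arrive as (triple, probability) pairs; the sample data and the `confident_facts` function are made up for illustration. The last lines work out the ratio implied by the figures above.

```python
CONFIDENCE_THRESHOLD = 0.9  # "true with at least 90% probability"


def confident_facts(scored, threshold=CONFIDENCE_THRESHOLD):
    """Keep only the (triple, probability) pairs at or above the threshold."""
    return [(triple, p) for triple, p in scored if p >= threshold]


# Invented sample scores, not real Knowledge Vault output.
sample = [
    (("alice", "lives_in", "sydney"), 0.97),
    (("alice", "born_in", "paris"), 0.42),
    (("bob", "works_for", "acme"), 0.90),
]
kept = confident_facts(sample)

# Share of extracted triples that ended up as confident facts,
# using the figures quoted in the article.
share = 271_000_000 / 1_600_000_000
```

On the quoted figures, about 17% of the extracted triples cleared the 90% bar.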

While the number of useful facts at this stage doesn’t measure up to the amount of data in the Knowledge Graph, this could quickly change in the future. Google may choose to integrate KV as the automated knowledge harvesting component of their existing framework and continue referring to the lot as the “Knowledge Graph”.

Felicity Nelson from Science Alert sees KV as part of Google’s future role as a personal assistant [2].

I agree.

References:

[1] Knowledge Vault: A Web-Scale Approach to Probabilistic Knowledge Fusion

Recent years have witnessed a proliferation of large-scale knowledge bases, including Wikipedia, Freebase, YAGO, Microsoft Satori, and Google’s Knowledge Graph. To increase the scale even further, we need to explore automatic methods for constructing knowledge bases. Previous approaches have primarily focused on text-based extraction, which can be very noisy. Here we introduce Knowledge Vault, a Web-scale probabilistic knowledge base that combines extractions from Web content (obtained via analysis of text, tabular data, page structure, and human annotations) with prior knowledge derived from existing knowledge repositories. We employ supervised machine learning methods for fusing these distinct information sources. The Knowledge Vault is substantially bigger than any previously published structured knowledge repository, and features a probabilistic inference system that computes calibrated probabilities of fact correctness. We report the results of multiple studies that explore the relative utility of the different information sources and extraction methods.

Research Team: Xin Luna Dong, Evgeniy Gabrilovich, Geremy Heitz, Wilko Horn, Ni Lao, Kevin Murphy, Thomas Strohmann, Shaohua Sun and Wei Zhang

[2] http://www.sciencealert.com.au/news/20142308-26058.html

Google’s Knowledge Vault already contains 1.6 billion facts

“In the future, virtual assistants will be able to use the database to make decisions about what does and does not matter to us. Our computers will get better at finding the information we are looking for and anticipating our needs. The Knowledge Vault is also going to be able to find correlations that humans would miss by sifting through enormous amounts of information. This could provide the means for massive medical breakthroughs, discovery of trends in human history and the prediction of the future.”

Dan Petrovic, the managing director of DEJAN, is Australia’s best-known name in the field of search engine optimisation. Dan is a web author, innovator and a highly regarded search industry event speaker.

ORCID iD: https://orcid.org/0000-0002-6886-3211