- Google uses user behaviour signals to evaluate the quality of its results.

- Traditional search quality analysis models are not fit for analysis of modern SERPs.

- CAS (clicks, attention, satisfaction) is a new user behaviour model developed by Google.

- The new model jointly captures click behaviour, user attention and user satisfaction.

- It works better than previous evaluation methods, particularly with non-linear layouts.

Paper: Incorporating Clicks, Attention and Satisfaction into a Search Engine Result Page Evaluation Model

Towards Non-Linear SERP Layouts

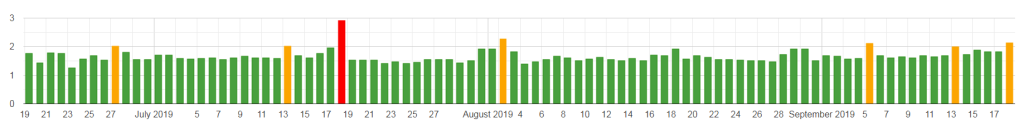

On a plain ten-result page, user attention and behaviour patterns can be predicted with ease. For example, position-based click bias is well understood, which is why search engines can (and do) use CTR as one of their quality evaluation metrics. Things get trickier with more complex result layouts.
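Position bias of this kind is often formalised as a position-based click model, in which the probability of a click is the probability that the user examines a rank multiplied by the probability that the result looks attractive. The sketch below is a generic illustration and not the CAS model from the paper. The examination probabilities and the function names are assumptions made up for the example.

```python
# Minimal position-based click model sketch.
# Assumption: these examination probabilities are illustrative, not measured.
EXAMINATION = [1.0, 0.6, 0.4, 0.3, 0.25, 0.2, 0.17, 0.15, 0.13, 0.12]


def click_probability(rank: int, attractiveness: float) -> float:
    """P(click) = P(user examines this rank) * P(result is attractive)."""
    if not 1 <= rank <= len(EXAMINATION):
        raise ValueError("rank must be between 1 and 10")
    if not 0.0 <= attractiveness <= 1.0:
        raise ValueError("attractiveness must be between 0 and 1")
    return EXAMINATION[rank - 1] * attractiveness


def estimate_attractiveness(rank: int, observed_ctr: float) -> float:
    """Invert the model to remove position bias from an observed CTR."""
    if not 1 <= rank <= len(EXAMINATION):
        raise ValueError("rank must be between 1 and 10")
    return min(1.0, observed_ctr / EXAMINATION[rank - 1])
```

A model like this only holds while every result is a plain link in a single column, which is exactly the assumption that richer layouts break.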

Contemporary non-linear SERP layouts change dynamically based on topic, context, timing, location, personalisation and many other factors.

This represents a challenge in quality evaluation, not just due to non-trivial behaviour patterns but also due to a phenomenon Google calls “good abandonment”.

What is “Good Abandonment”?

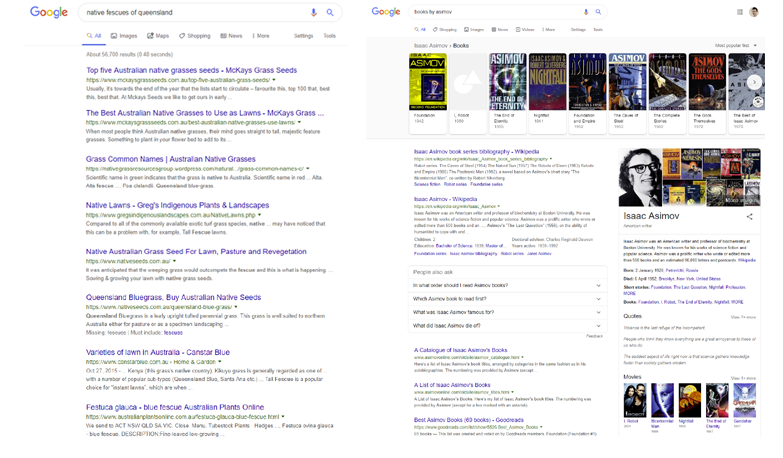

Good abandonment is when users leave a search result page satisfied without clicking on anything. In other words, the utility ends with Google, whether through performing an action or retrieving information.

Some examples of queries which may lead to good abandonment:

In the past, SERP abandonment has been treated as a poor experience signal. Now it’s probably a 50-50 case.

This is what Google’s CAS model is all about: understanding user behaviour in the context of the evolved SERP.

If Google’s thinking about it, so should we.

Practical Takeaways

Implications for Long-Tail Traffic

Google researchers consider long-tail queries to be informational rather than navigational in nature.

Since the user is looking for information, they may well be satisfied by the answer if it is presented directly on a SERP, be it inside an information panel or just as part of a good result snippet.

This should be a red flag, as it hints at a mindset within Google that justifies “good abandonment” despite the obvious lack of benefit to publishers.

Here’s an excerpt from an article I wrote in 2013, sadly predicting everything that is happening now:

Google is an information company and their job is to provide answers to our questions. If your business is based on the same model, then sooner or later you will be out of the game. Google has no ambition to show more search results in their search results. This is not being evil, it’s being efficient and giving your users the best answer, and quickly.

Who is at risk?

Any business which provides answers to questions including, but not limited to:

- Data, product and service aggregators

- Flight, hotel, car rental search and booking sites

- Price comparison, weather reports, sports results

- Event calendars and various other data-driven websites

- Domain information, IP address lookups, time/date and currency conversion.

Who is safe?

Original producers of content, products, tools and services will continue to enjoy the benefits of organic search traffic. One thing to watch out for is that your data is not detached from your brand and presented to your audience directly through search results. Some already fear this might be taking place through rich snippets and the knowledge graph.

In Between

At this stage, Google is incapable of providing well-organised, curated content or meaningful advice or opinion. In the next ten years or so we will still see human-generated content as the dominant source of this type of information and advice. The decade after that, however, will challenge this and we will see artificial intelligence assume a more meaningful role in content curation, opinion and even advice.

Implication for Click Models and Traffic Projections

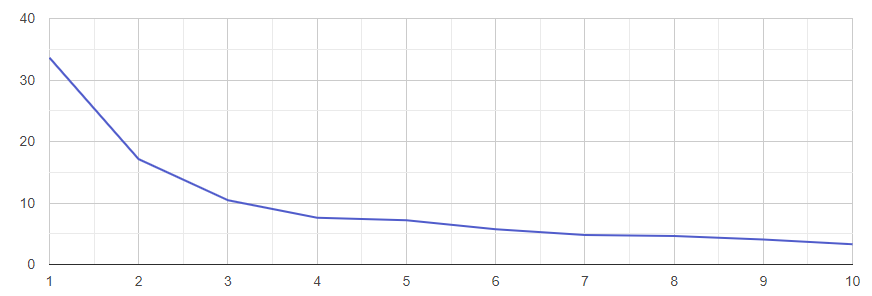

CTR optimisation is an integral part of every good SEO campaign. The objective is simple: “Same Rank, More Clicks”. This is done by making titles, descriptions and schema more appealing to users.

But here’s the thing: in order to understand what works and what doesn’t, we need an effective benchmark and reliable metrics. Unfortunately, we no longer have them.

Here’s why.

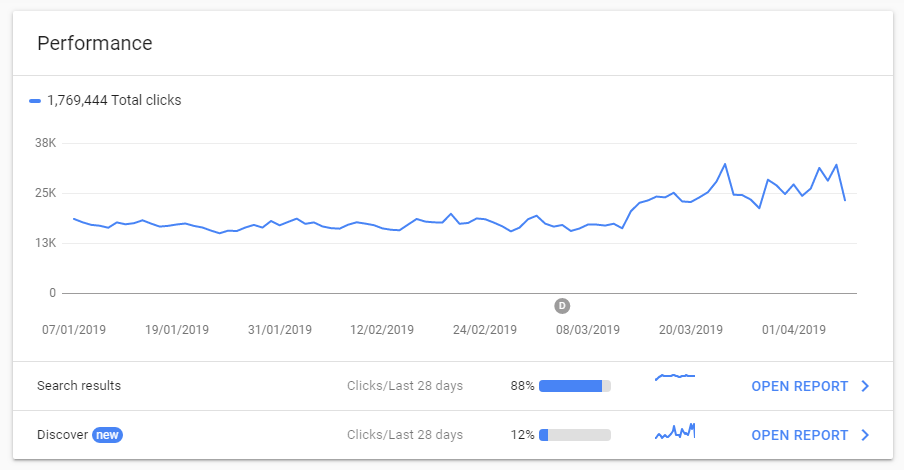

Below is a CTR distribution curve of a large travel website:

Calculated Using: https://www.phraseresearch.com

This is the data behind the CTR curve:

| Rank | Average CTR | Keywords | Instances |
| --- | --- | --- | --- |
| 1 | 33.62% | 112 | 3550 |
| 2 | 17.11% | 838 | 12910 |
| 3 | 10.46% | 897 | 11592 |
| 4 | 7.59% | 701 | 8344 |
| 5 | 7.19% | 430 | 5574 |
| 6 | 5.75% | 216 | 3944 |
| 7 | 4.81% | 189 | 4200 |
| 8 | 4.62% | 222 | 4816 |
| 9 | 4.06% | 182 | 2906 |
| 10 | 3.28% | 174 | 3408 |

CTR averages for this website can be used as a benchmark for detecting CTR anomalies, both positive and negative. The benchmark flags severely underperforming pages whose CTR lags behind the website average. Likewise, it helps detect pages with above-average CTR and uncover snippet optimisation patterns otherwise buried in the massive amount of data.
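As a rough sketch of how such a benchmark could be applied, the snippet below compares a page's observed CTR with the site average for its rank, using the figures from the table. The function names and the 0.5 and 1.5 thresholds are arbitrary assumptions for illustration, and ranks are assumed to be whole numbers from 1 to 10.

```python
# Site-wide average CTR per rank, taken from the table (as fractions).
BENCHMARK_CTR = {
    1: 0.3362, 2: 0.1711, 3: 0.1046, 4: 0.0759, 5: 0.0719,
    6: 0.0575, 7: 0.0481, 8: 0.0462, 9: 0.0406, 10: 0.0328,
}


def ctr_ratio(rank: int, clicks: int, impressions: int) -> float:
    """Observed CTR divided by the benchmark CTR for the same rank."""
    if rank not in BENCHMARK_CTR:
        raise ValueError("no benchmark for this rank")
    if impressions <= 0:
        raise ValueError("impressions must be positive")
    return (clicks / impressions) / BENCHMARK_CTR[rank]


def classify(rank: int, clicks: int, impressions: int,
             low: float = 0.5, high: float = 1.5) -> str:
    """Label a page relative to the benchmark (thresholds are assumptions)."""
    ratio = ctr_ratio(rank, clicks, impressions)
    if ratio < low:
        return "underperforming"
    if ratio > high:
        return "overperforming"
    return "typical"


# A rank-3 page with 20 clicks from 1,000 impressions (2% CTR vs 10.46%).
label = classify(3, 20, 1000)
```

Comparing against a ratio rather than an absolute gap keeps the same thresholds meaningful at rank 1 and rank 10, where the benchmark CTRs differ by an order of magnitude.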

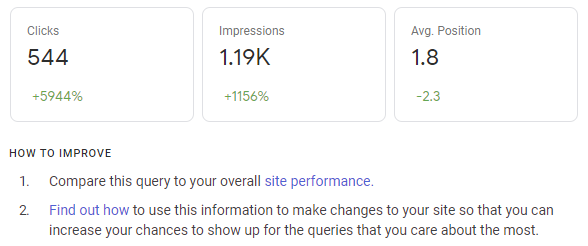

Google suggests we do this too. Webmasters can see the following in their search results, with the advice to “compare this query to your overall site performance”:

How Google Can Help Webmasters

While we do know the position of our result, we still lack the deeper context of a contemporary SERP, and this creates a lot of false positives.

Why is there such a bad CTR? Is it a bad snippet on my page or is it Google? Was there a knowledge panel? Carousel? Local results?

We just don’t know.

To make meaningful decisions that lead to meaningful improvements, Google’s Search Console needs to show more data.

Specifically, it needs to include data on special SERP features, along with options to filter by them.

Thanks Google, we count on you!

№1 ≠ №1

Search result layout is a bigger traffic factor than rankings.

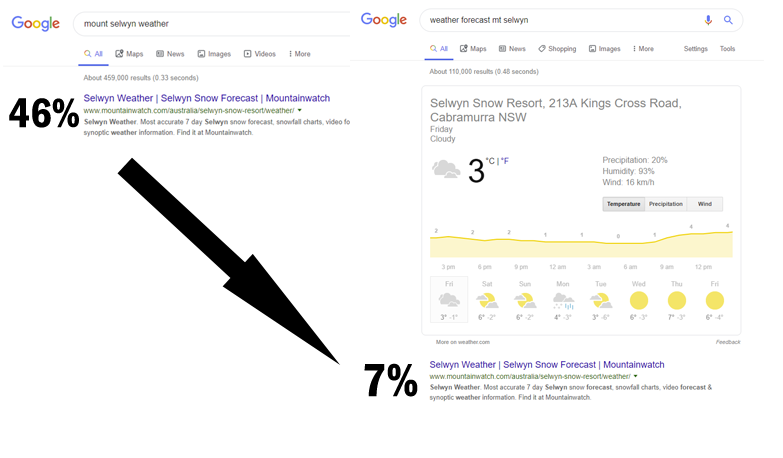

In this one instance, Google forgot to include the weather panel for one query, which allowed me to run a CTR comparison on both:

Intent-wise, we’re looking at nearly identical queries and the same target URL in SERPs, with a CTR difference of 39% simply because of the presence of one special SERP feature.

This is what Google calls “good abandonment”. While it’s certainly “good” for Google’s users, “good” is not how the #1 result on this page would describe this situation.

Negative CTR Influencers

Your results will receive fewer clicks than average if there is at least one special search feature in the results. A combination of multiple special features only makes things worse.

The list includes (but is not limited to):

- Search box auto-complete

- Featured Snippets

- Calculators

- Answer Box

- Local Results

- Knowledge Panel / Carousel

- Image Results

- Video Results
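One way to measure the effect of these features is to split observations at the same rank by whether a special feature was present and compare the pooled CTR of each group. This is only a sketch: the `has_feature` flag is a hypothetical field that would have to come from an external SERP tracking source, and the records below are invented purely to show the calculation.

```python
from collections import defaultdict


def ctr_by_feature(rows):
    """Pool clicks and impressions per has_feature flag, then compute CTR."""
    totals = defaultdict(lambda: [0, 0])  # flag -> [clicks, impressions]
    for row in rows:
        bucket = totals[row["has_feature"]]
        bucket[0] += row["clicks"]
        bucket[1] += row["impressions"]
    return {flag: clicks / impressions
            for flag, (clicks, impressions) in totals.items()
            if impressions > 0}


# Invented example records for results at the same rank.
rows = [
    {"query": "example query a", "has_feature": True, "clicks": 50, "impressions": 1000},
    {"query": "example query b", "has_feature": False, "clicks": 300, "impressions": 1000},
    {"query": "example query c", "has_feature": True, "clicks": 30, "impressions": 1000},
]
result = ctr_by_feature(rows)
```

Pooling clicks and impressions before dividing, rather than averaging per-query CTRs, stops low-volume queries from skewing the comparison.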

Dan Petrovic, the managing director of DEJAN, is Australia’s best-known name in the field of search engine optimisation. Dan is a web author, innovator and a highly regarded search industry event speaker.

ORCID iD: https://orcid.org/0000-0002-6886-3211