Updated: 28/11/2025

After speaking on two world stages, at two of the most incredible and important conferences, I bring you my updated take on technical SEO’s adaptation for AI visibility. Please follow, support and invite all of your friends to attend WTSFest, run by the incredible Women In Tech SEO community, and the Shenzhen SEO Conference. It was an incredible experience to share the stage with so many talented individuals and to feel the energy and curiosity from all of you in the audience. I had so many great questions after my talk, and so many requests for my slides, that I decided to write a blog post sharing them and exploring each facet in more depth.

Please reach out to me if you have questions. The team and I at Dejan are in this because we love search and we love learning; we thrive on curiosity and strive to achieve the best results for our clients.

We’d love to hear from you! ^v^

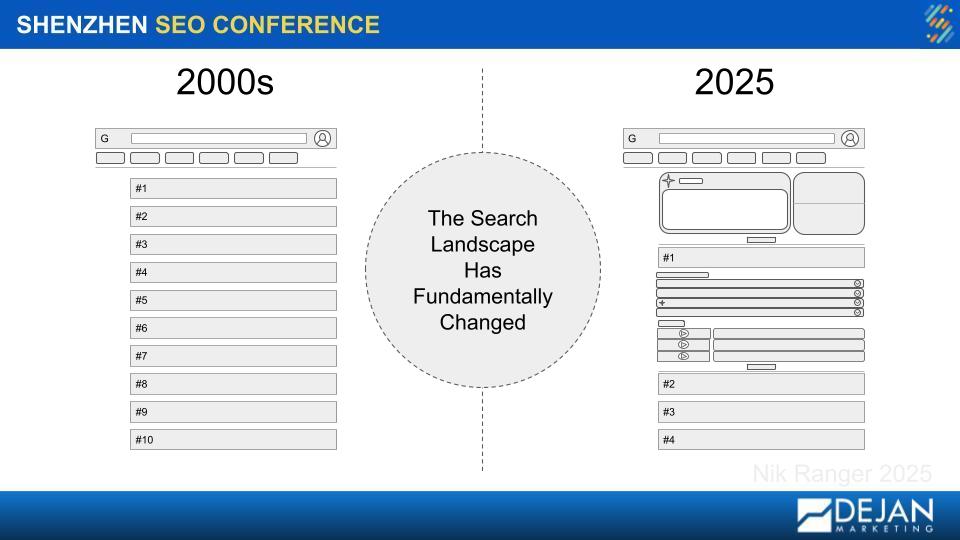

The Search Landscape Has Fundamentally Changed

It’s no secret that today’s search engine results pages (SERPs) look drastically different from the ten blue links we saw in the early 2000s. We’ve moved from a simple list of websites to a dynamic, conversational and answer-focused experience.

At their core, search engines are built on the principle of information retrieval, which has evolved well beyond simple keyword matching. They now operate on a semantic level, understanding queries as a collection of real-world entities (people, places, concepts) and the relationships between them. This understanding is powered by vast knowledge graphs, which act like a structured map of facts, connecting these entities and defining their attributes. Search engines are no longer just looking for pages that contain your words; they retrieve and present information centred on the specific entities and concepts users are interested in, leading to far more accurate and contextually aware answers.

At the 2018 Google I/O keynote, Google announced its core objectives for the future of search: to be predictive, conversational and personalised. That vision became even clearer with the recent announcement, on September 18th, of further integrating Gemini into the Chrome user interface.

[To understand how the modern search engine has evolved, scroll through this really helpful overview of how Google has worked over the past 20 years to develop, train and integrate AI into the search experience.]

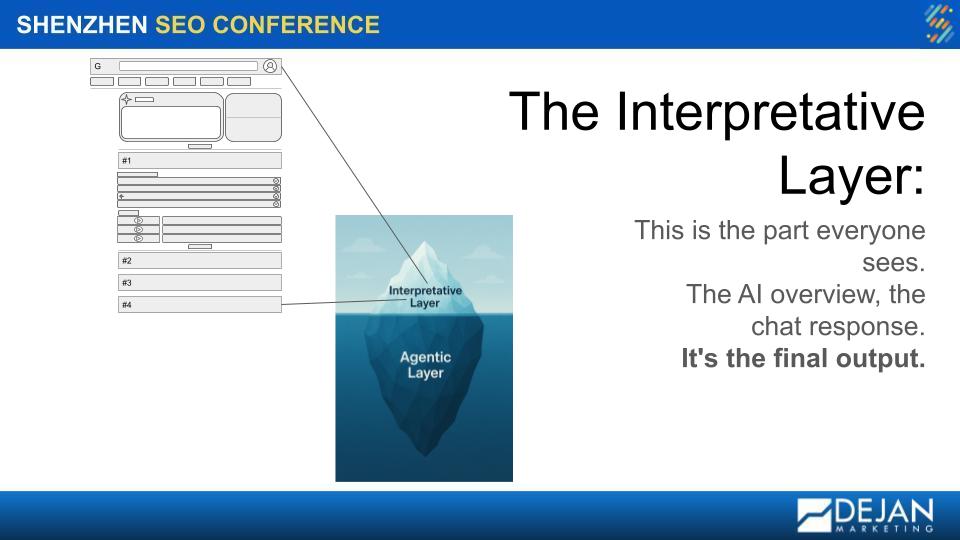

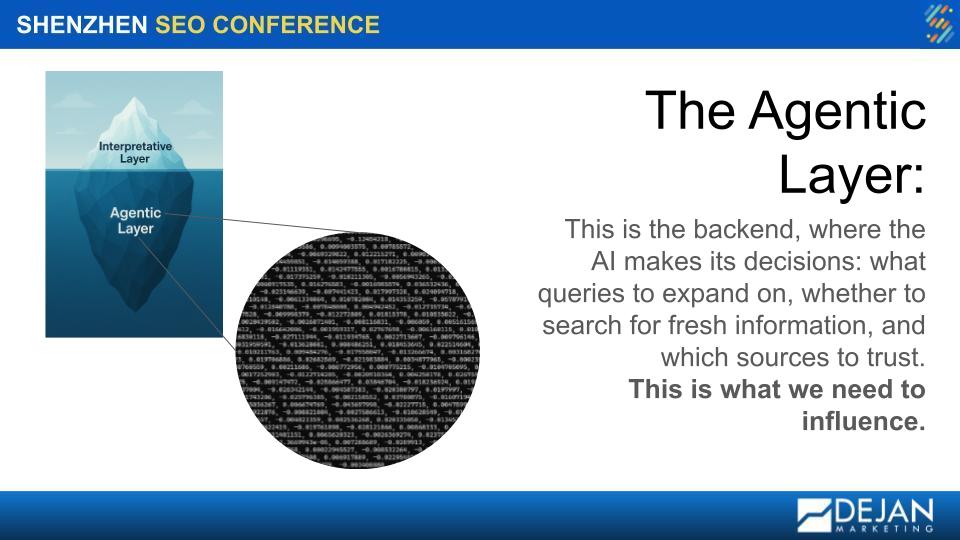

The Two Layers of AI: Interpretative vs. Agentic

To really get a grip on this new landscape, we need to understand that AI in search operates on two distinct levels:

- The Interpretative Layer: This is the part everyone sees and talks about. It’s the AI Overview at the top of the SERP, the response you get from a chatbot. It’s the final, polished output.

- The Agentic Layer: This is the back end, the engine room where the real decisions happen. This layer determines which queries to explore, whether it needs to search for fresh information, and which sources it should trust. Crucially, this layer is not driven by clicks; it is steered by the suggestions and associations the model has confidence in. This is the layer we need to influence.

I highly recommend you read Dan Petrovic’s post on how SEOs should think about AI models.

As SEOs, we’ve seen our job evolve. It’s no longer enough to simply rank; we’re on a two-part mission of understanding and control. I chose the words ‘understanding’ and ‘control’ very deliberately in my presentation, because they’re specific concepts in machine learning and the pillars of our work.

‘Understanding’ is our pursuit of mechanistic interpretability, the ability to reverse engineer an AI’s decision making process to see how and why it produces a specific answer, effectively mapping its internal logic.

‘Control’ is the application of that knowledge through model steering, where we work to derive meaningful query fan-outs designed to reinforce specific neural pathways and shape the AI’s perception of our client’s brand. This is how we’re moving away from reacting to search results to proactively influencing the complex systems that generate them, hopefully allowing us to build a more predictive, measurable authority for our clients.

I highly recommend reading Dan Petrovic’s post to learn more about understanding and control.

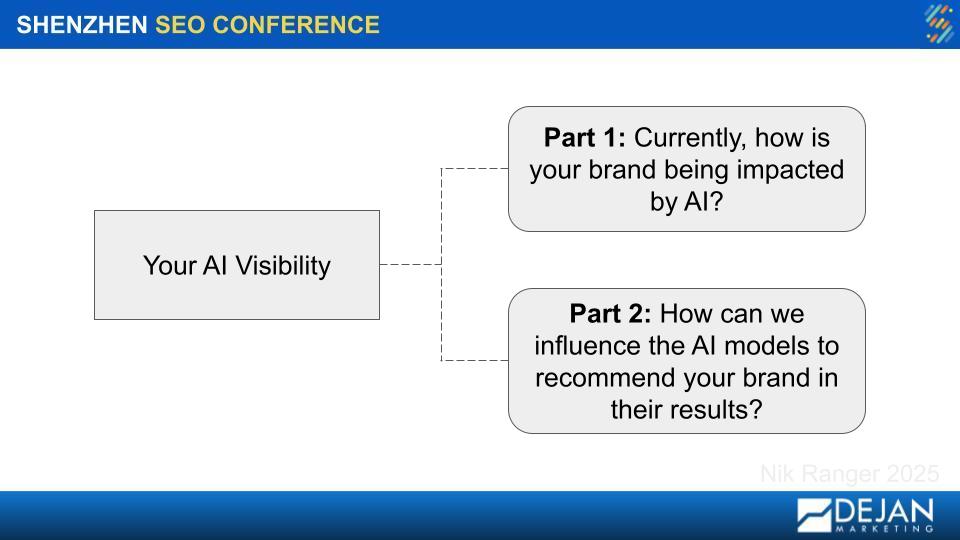

Now, when thinking about AI Visibility, I first asked myself two questions I wanted to answer:

- Question 1: Currently, how is my brand being impacted by AI?

- Question 2: How can I influence the AI models to recommend my brand in their results?

From talking to you guys, I think you had the same questions!

Part 1: How Is Your Brand Being Impacted By AI?

This is the first and most important step. To accurately attribute whether or not your brand is being impacted by AI, I first want to understand from you what drives your business’s revenue, which markets you care about, who your audience is and what motivates them. Then I map your queries by intent and pattern so I can give a truly meaningful diagnosis.

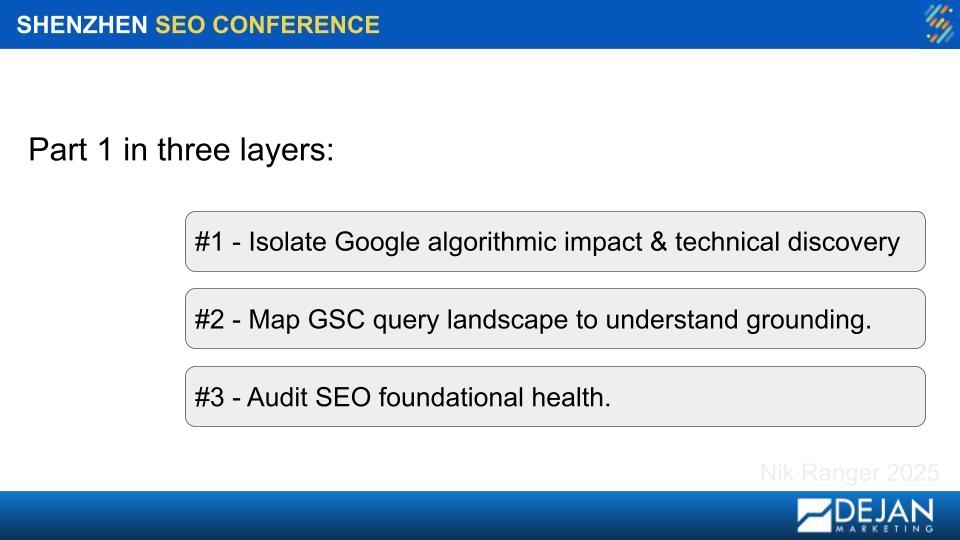

I want to understand and prove the “as is” reality of where your brand stands in the mind of the machine. I approach this diagnosis in three layers.

Layer 1: Isolate Algorithmic Impact (WIMOWIG) & Technical Issues

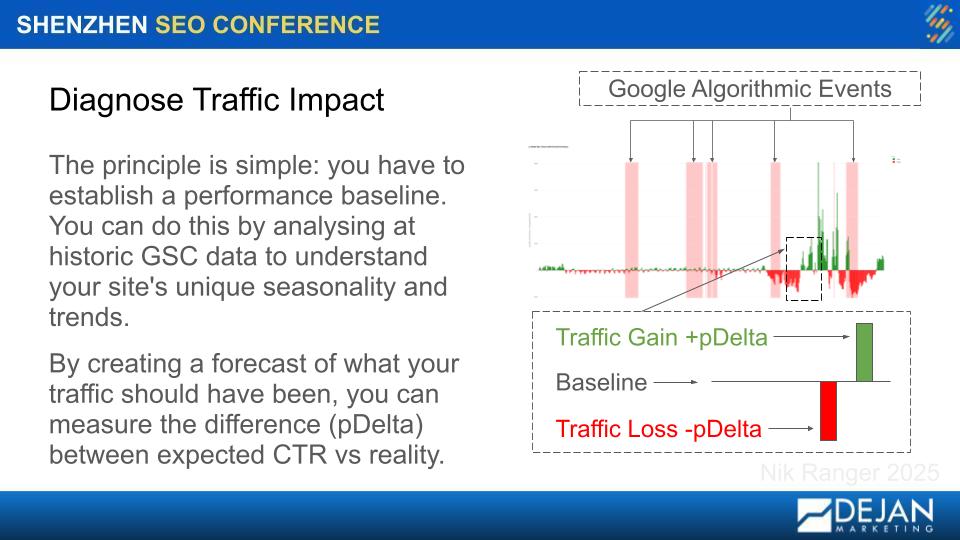

With today’s complex and frequent Google updates, the first question we always have to answer when traffic changes is: Was It Me Or Was It Google? Or for short: WIMOWIG.

Developed by Dan Petrovic, WIMOWIG leverages the Prophet forecasting algorithm, the same machine learning technology used by companies like Uber and Airbnb for demand prediction. It creates baselines of what your website’s performance should have been based on historical patterns, seasonality and trends. The system analyses your Search Console data going back 600 days to establish robust statistical patterns, then generates forecasts that account for weekly seasonality, monthly trends and long-term growth trajectories.

The key insight comes from the p-Delta calculation (performance delta). It measures the difference between your actual clicks, impressions and rankings and what our model predicted they should have been on any given day. When we overlay this analysis with Google’s official algorithm update timeline, we can statistically quantify which algorithm updates actually impacted your site, and by how much. From there, I analyse the data and extract queries from the impacted date ranges. I then look for patterns and anomalies in those queries, their respective pages and the search engine results pages, and form hypotheses about potential causes.
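The core p-Delta idea is comparing actuals against a forecast baseline. Below is a minimal, stdlib-only illustration. It assumes you already have a daily forecast (for example, exported from a Prophet model) and actual clicks, both keyed by date. Several choices here are my own illustrative assumptions, not WIMOWIG’s actual implementation: the function names, the 14-day window and the before/after comparison. The data is synthetic.

```python
from __future__ import annotations

from datetime import date, timedelta
from statistics import mean


def p_delta(actual: dict[date, float], forecast: dict[date, float]) -> dict[date, float]:
    """Daily performance delta: actual minus forecast, for days present in both series."""
    return {d: actual[d] - forecast[d] for d in actual if d in forecast}


def update_impact(deltas: dict[date, float], update_day: date, window: int = 14) -> float:
    """Illustrative impact score: mean delta over the `window` days from the update
    onwards, minus the mean delta over the `window` days before it."""
    before_days = (update_day - timedelta(days=i) for i in range(1, window + 1))
    after_days = (update_day + timedelta(days=i) for i in range(window))
    before = [deltas[d] for d in before_days if d in deltas]
    after = [deltas[d] for d in after_days if d in deltas]
    if not before or not after:
        raise ValueError("not enough data around the update date")
    return mean(after) - mean(before)


# Synthetic example: a flat baseline of 100 clicks/day and a drop to 80 from day 30.
start = date(2025, 1, 1)
days = [start + timedelta(days=i) for i in range(60)]
update = start + timedelta(days=30)
forecast = {d: 100.0 for d in days}
actual = {d: (100.0 if d < update else 80.0) for d in days}

print(update_impact(p_delta(actual, forecast), update))  # -20.0
```

A negative score means performance after the update fell further below the baseline than it did before. That is the signal you would then check against the update timeline and your query-level hypotheses.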

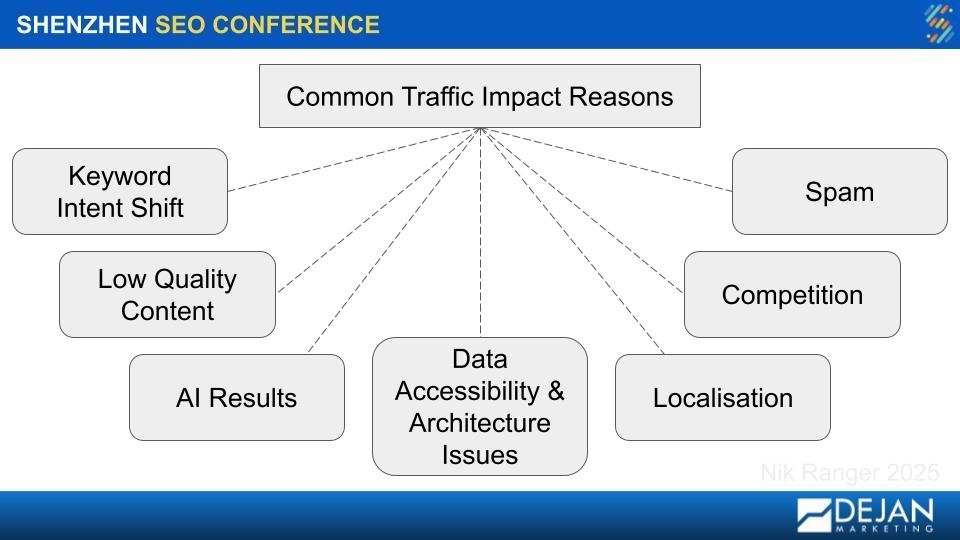

Commonly, I see traffic fluctuations caused by:

- Data accessibility and architectural problems

- A shift in keyword intent

- AI generated results

- Localisation

- Competition

- Spam issues

- Low quality content

I carry these hypotheses into a thorough technical discovery audit that unpacks crawlability, rendering, indexability and the critical signals that determine data and bot accessibility for your site. This is where I pressure test that accessibility, because both traditional search engine crawlers and the bots feeding AI models operate on a simple principle: if they can’t access your content, it effectively doesn’t exist.

Throughout this process, I examine the entire crawling and rendering pipeline, from robots.txt directives and XML sitemaps to the complexities of JavaScript execution, to ensure bots see the same rich, complete content that a user does. A single misconfiguration can render your most important pages invisible, effectively locking them away. In the context of AI, this means your authoritative content will never be considered for a grounded response or an AI Overview, because it simply won’t be found during the live retrieval process. This audit, therefore, isn’t just a box-ticking exercise; it’s the foundational check that ensures your content has a chance to compete in the first place. I love finding the root cause of problems so I can understand why sites have performance ceilings or blockers, and then fix them. That’s the whole reason I decided many years ago to specialise in technical SEO!
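A quick first check in this pipeline is whether robots.txt blocks the user agents that matter for AI retrieval. Here’s a minimal sketch using Python’s standard `urllib.robotparser`. The sample rules and URLs are illustrative assumptions, and so is the user-agent list (Googlebot, GPTBot, Google-Extended). Check each vendor’s documentation for its current crawler names. robots.txt only covers crawl permission, so this won’t catch rendering or indexability problems.

```python
from __future__ import annotations

from urllib.robotparser import RobotFileParser

# Illustrative robots.txt content; in practice, fetch your live file.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Disallow: /checkout/
"""

# Assumed user-agent tokens to test; verify current names with each vendor.
AGENTS = ["Googlebot", "GPTBot", "Google-Extended"]
URLS = ["https://example.com/tours/", "https://example.com/checkout/"]


def access_report(robots_txt: str, urls: list[str], agents: list[str]) -> dict[tuple[str, str], bool]:
    """Map each (agent, url) pair to whether robots.txt allows the fetch."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {(agent, url): parser.can_fetch(agent, url) for agent in agents for url in urls}


report = access_report(ROBOTS_TXT, URLS, AGENTS)
for (agent, url), allowed in report.items():
    print(f"{agent:16} {url:32} {'allowed' if allowed else 'BLOCKED'}")
```

Running this against your real robots.txt and a sample of your most important URLs makes an accidental blanket block on an AI crawler obvious at a glance.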

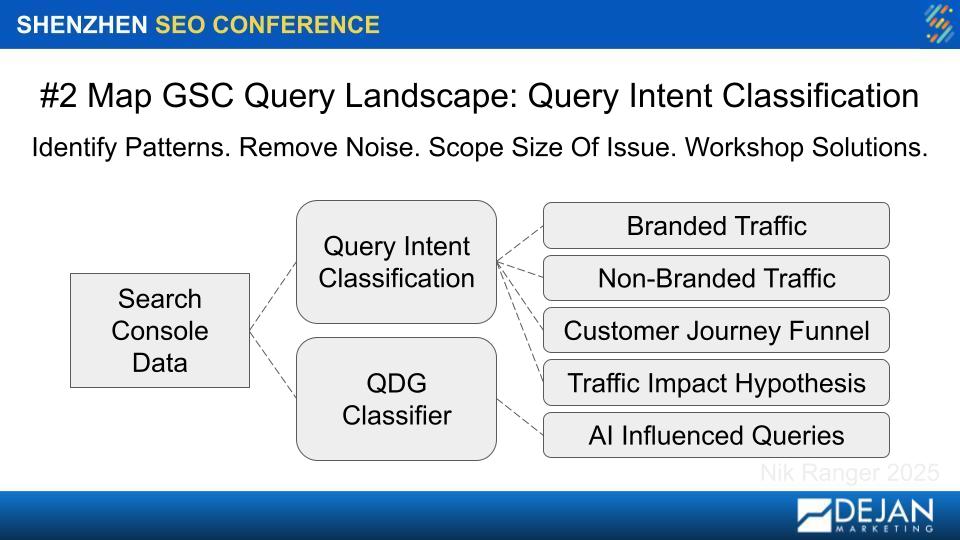

Layer 2: Mapping Search Console Queries By Search Intent and Grounding Classification

Once I’ve accounted for major algorithmic shifts, thoroughly audited the technical architecture of your site and built a list of hypotheses I want to test, I turn to query intent classification and grounding classification for all of your meaningful Search Console queries. That means taking all of your Google Search Console (GSC) data and segmenting it into types that mean specific things to your customers, so I can identify patterns. I tend to do four things:

- Split it by branded vs. non-branded traffic for a helpful overview.
- Map out the customer journey.
- Separate the patterns that form my hypotheses about what’s impacting traffic.
- Run our Query Demands Grounding Classifier to see which queries AI models are likely to answer with a live search.

To learn more about this, please read Dan Petrovic’s post on Query Intent Classification.
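To make the segmentation step concrete, here is a minimal rule-based sketch that tags GSC queries as branded or non-branded and assigns a rough intent bucket. It is a simple keyword heuristic, not the trained intent classifier from the post above. The brand terms, intent modifiers and sample rows are hypothetical placeholders.

```python
from __future__ import annotations

import re
from collections import Counter

# Hypothetical brand terms and intent modifiers: replace with your own.
BRAND_TERMS = ["acme", "acme tours"]
INTENT_MODIFIERS = {
    "transactional": ["book", "buy", "price", "tickets", "deal"],
    "informational": ["how", "what", "why", "best time", "guide"],
    "navigational": ["login", "contact", "near me"],
}


def word_pattern(terms: list[str]) -> re.Pattern[str]:
    """Compile a case-insensitive, whole-word pattern matching any of the terms."""
    return re.compile(r"\b(?:" + "|".join(map(re.escape, terms)) + r")\b", re.IGNORECASE)


BRAND_RE = word_pattern(BRAND_TERMS)
INTENT_RES = {intent: word_pattern(mods) for intent, mods in INTENT_MODIFIERS.items()}


def segment(query: str) -> tuple[str, str]:
    """Return (brand segment, intent) using simple keyword rules; the first matching intent wins."""
    brand = "branded" if BRAND_RE.search(query) else "non-branded"
    for intent, pattern in INTENT_RES.items():
        if pattern.search(query):
            return brand, intent
    return brand, "unclassified"


# Made-up GSC rows (query, clicks), purely for illustration.
rows = [
    ("acme tours rome", 120),
    ("how to visit the colosseum", 40),
    ("book vatican tickets", 75),
    ("acme login", 10),
]
clicks_by_segment = Counter()
for query, clicks in rows:
    clicks_by_segment[segment(query)] += clicks

for (brand, intent), clicks in clicks_by_segment.most_common():
    print(f"{brand:12} {intent:14} {clicks}")
```

Rules like these are brittle, which is exactly why a trained classifier is worth it at scale. Still, aggregating clicks by segment in this way is often enough to surface the first patterns worth investigating.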

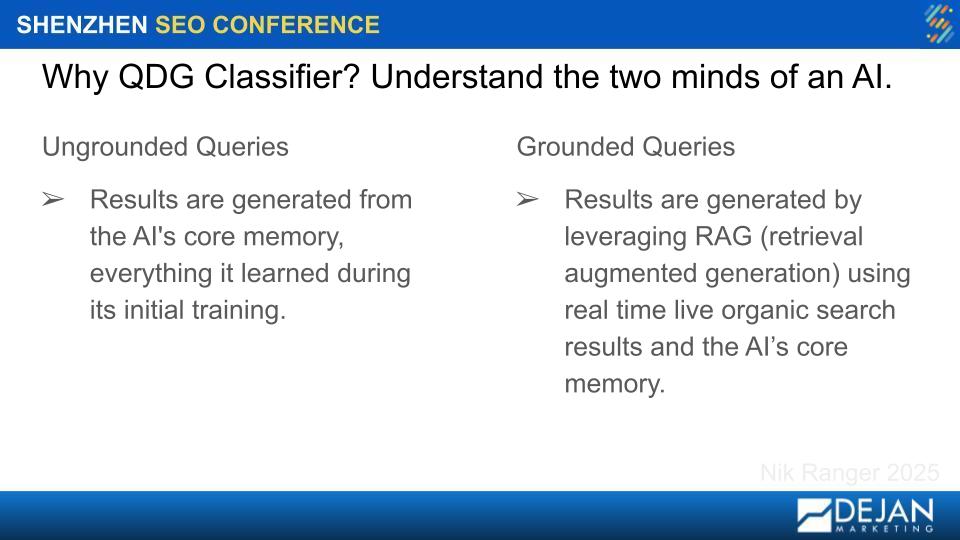

A critical part of this is our Query Demands Grounding (QDG) Classifier. This model, trained by Dan Petrovic, is essential for understanding the two minds of the AI:

- Ungrounded Queries: The AI answers these from its core memory, the static data it was trained on.

- Grounded Queries: The AI uses Retrieval Augmented Generation (RAG) to perform a live search and combine those real time results with its core memory to generate an answer.

As SEOs, this is where we have power: we can directly influence the results of grounded queries. And the great news? Our analysis shows that, on average, around 91-96% of queries across most businesses are grounded for ChatGPT, and 100% of queries are grounded for Gemini across all businesses. This means traditional SEO signals like technical health, content quality and links are more important than ever for influencing AI.

Want to learn more about this? To understand when Gemini uses grounding for user queries, read Dan Petrovic’s post.
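Grounding percentages like these are shares of classified queries. Here’s a minimal sketch of that aggregation step, assuming each query already carries a grounded/ungrounded label from a classifier. The QDG classifier itself isn’t reproduced here. The queries, impressions and labels are placeholders. Weighting by impressions is an optional extra I’ve added to show how query-count and demand-weighted views can differ.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class LabelledQuery:
    query: str
    impressions: int
    grounded: bool  # assumed output of a grounding classifier such as QDG


def grounded_share(rows: list[LabelledQuery], weighted: bool = False) -> float:
    """Share of queries labelled as grounded, optionally weighted by impressions."""
    if not rows:
        return 0.0
    if weighted:
        total = sum(r.impressions for r in rows)
        return sum(r.impressions for r in rows if r.grounded) / total if total else 0.0
    return sum(r.grounded for r in rows) / len(rows)


# Placeholder rows, not real classifier output.
rows = [
    LabelledQuery("best time to visit rome", 900, True),
    LabelledQuery("what is a walking tour", 300, False),
    LabelledQuery("rome food tour prices", 600, True),
    LabelledQuery("colosseum opening hours", 200, True),
]
print(f"{grounded_share(rows):.0%} of queries, {grounded_share(rows, weighted=True):.0%} of impressions")
```

Reporting both numbers helps: a site can have a modest share of grounded queries yet a much larger share of its actual demand flowing through them.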

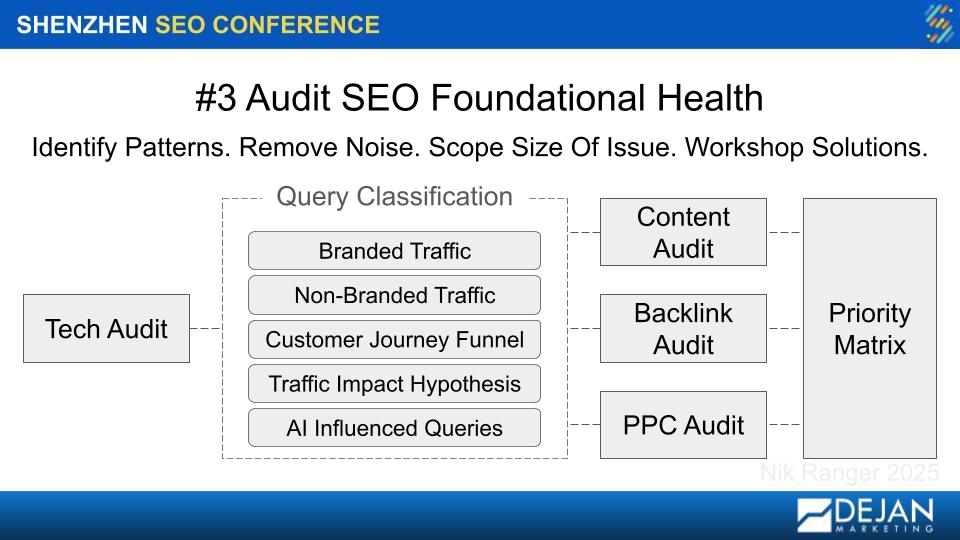

Layer 3: Audit Your Foundational SEO Health

With a clear picture of the algorithmic and AI-driven landscape, as well as a thorough technical discovery audit, the final diagnostic step is to conduct content, link (internal and external) and PPC audits. The PPC audit shows which campaigns and queries you’re bidding on and lets us evaluate the queries you’re testing. By identifying patterns and removing the noise, we can scope the size of the issue and begin to workshop solutions. As I said before, I care deeply about getting ideas and fixes implemented. With precious resources like time and money being scarce, I want to make sure we have a complete view of the diagnosis, your business and your customer audience so we can give you a priority matrix. Time is money, so let’s do things that will actually drive results!

This diagnostic process might reveal that your traffic drop wasn’t because you were absent from AI Overviews, but because of a keyword intent shift or a penalty. Knowing the true root cause is everything.

Part 2: How to Influence AI Models

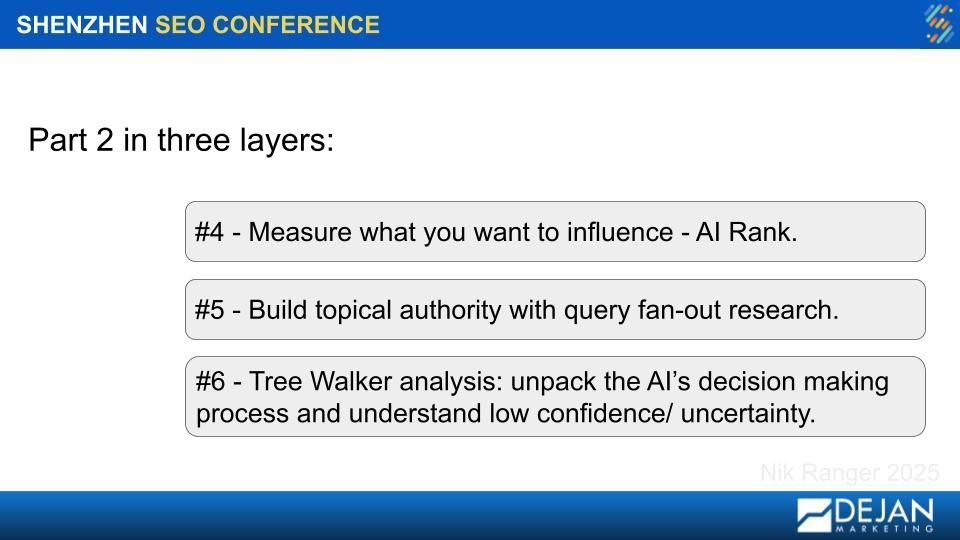

To influence AI models, we employ a precise, three part analysis that begins with AI Rank to continuously measure our brand’s perception and establish a clear KPI. We then leverage Query Fan-Out to strategically build comprehensive topical authority, ensuring we answer every relevant question an AI agent might have. Finally, we use Tree Walker analysis to deconstruct the AI’s decision making process, pinpointing areas of low confidence that we can strengthen with strategically meaningful content and messaging.

We’ve found this workflow is not just efficient; it’s an extremely powerful methodology, tooled specifically for each client, so we can focus only on what the data reveals is necessary. Because we take the time in the first phase to understand the business, its services or products and its target audiences, the approach is adaptable enough that, when required, it can extend to training bespoke models on a client’s own data to solve their most unique visibility challenges. 2025 and beyond is just that cool; this is truly awesome work!!

OK, let me explain the three-part analysis in more depth.

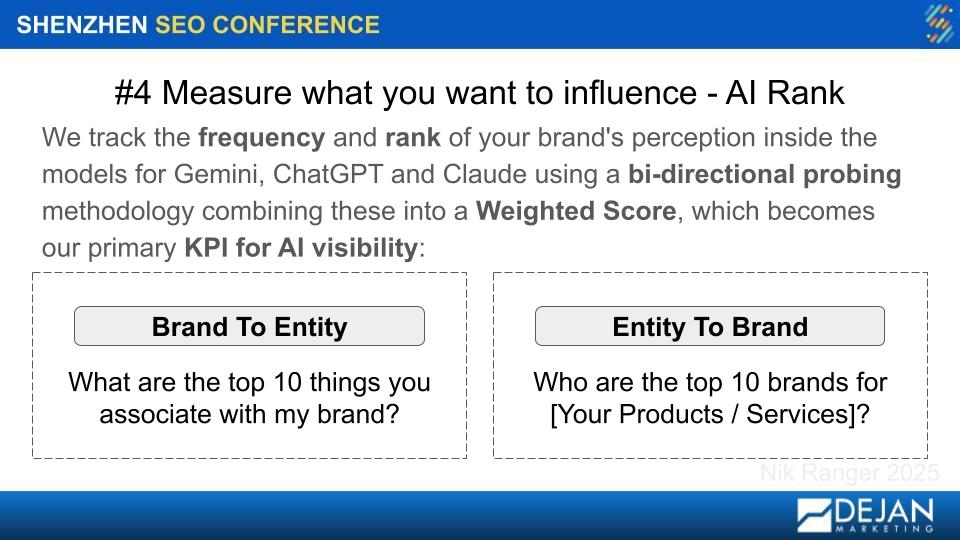

Layer 4: Measure What You Want to Influence with AI Rank (FREE)

You can’t improve what you don’t measure, but traditional rank tracking is no longer sufficient, because AI models don’t think in keywords; they think in a complex web of brand and concept associations.

This is why we developed AI Rank, our FREE AI visibility tracking tool, which is built on the exact principle we explored in our workshop exercise. We systematically probe the models with bi-directional prompts, asking ‘what do you associate with my brand?’ (Brand to Entity) and ‘what brands do you associate with my core service?’ (Entity to Brand). We then use the answers to map the AI’s perception of your brand and your competitors. AI Rank automates this process, tracking these associations over time to provide a measurable, weighted score of our true visibility in the mind of the machine.

To understand how AI Rank works, read the fantastic in-depth analysis written by its architect, Dan Petrovic. https://airank.dejan.ai/

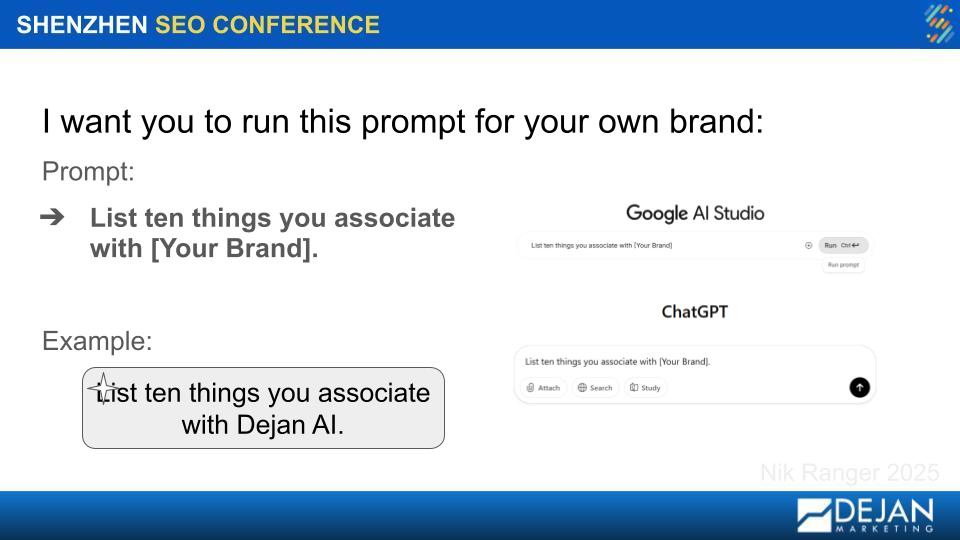

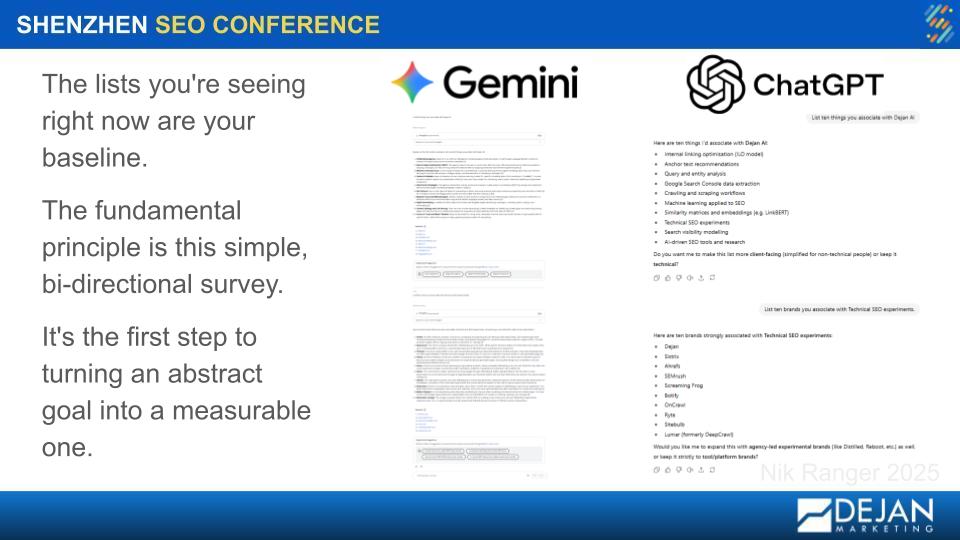

Let’s do this quick workshop exercise together, just as we did in Shenzhen! I created it for the audience so they could grasp the fundamental concepts quickly, and you can follow along at home.

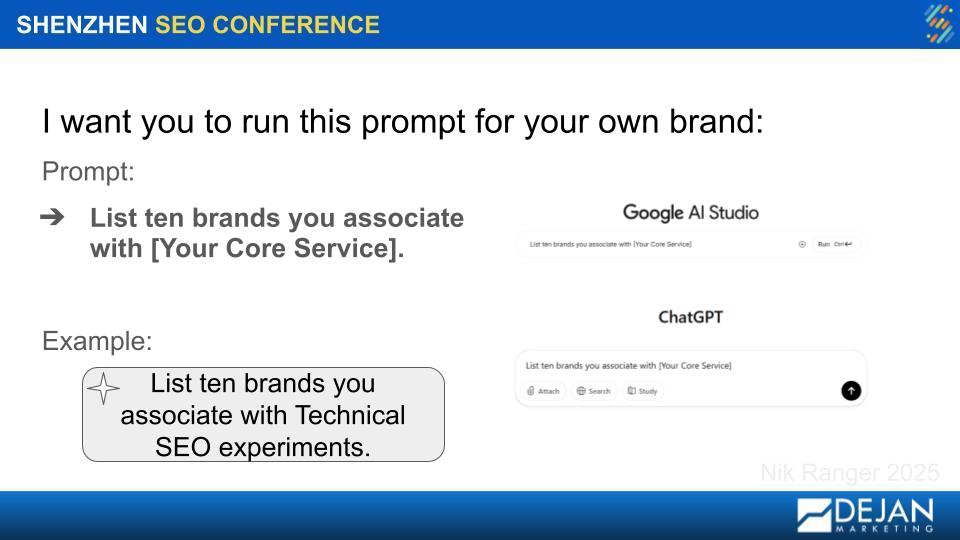

Open your preferred chatbot (Gemini or ChatGPT) on your phone.

Prompt 1: List ten things you associate with [Your Brand].

Prompt 2: List ten brands you associate with [Your Core Service]. Now, look at this list. Are you on it? If not, who is? Why do you think the AI associates them with that service instead of you?

This simple, bi-directional survey is the fundamental principle behind our AI Rank tracking. We run these prompts at scale, across multiple models (Gemini, ChatGPT, Claude), to create a weighted score that becomes our primary KPI for AI visibility. It turns an abstract goal into a measurable one.
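There are many ways to turn the bi-directional survey into a number. Here’s a minimal sketch that uses reciprocal-rank weighting (first place scores 1, second 1/2, and so on), averaged over repeated runs and models. That weighting is my illustrative assumption and not necessarily how AI Rank computes its score. The sketch covers the Entity-to-Brand direction. Brand-to-Entity answers could be scored the same way against the entities you want associated with your brand. The responses are placeholders, not real model output.

```python
from __future__ import annotations

from collections import defaultdict


def rank_weight(position: int) -> float:
    """Hypothetical weighting: 1.0 for first place, 0.5 for second, 1/3 for third, ..."""
    return 1.0 / position


def visibility_scores(responses: dict[str, list[list[str]]]) -> dict[str, float]:
    """Average rank-weighted score per brand across models and repeated runs.

    `responses` maps a model name to its runs; each run is the ordered list of
    brands returned for one Entity-to-Brand prompt.
    """
    totals: dict[str, float] = defaultdict(float)
    n_runs = 0
    for runs in responses.values():
        for brands in runs:
            n_runs += 1
            seen: set[str] = set()
            for position, brand in enumerate(brands, start=1):
                key = brand.strip().lower()
                if key in seen:  # count each brand at most once per run
                    continue
                seen.add(key)
                totals[key] += rank_weight(position)
    return {brand: total / n_runs for brand, total in totals.items()} if n_runs else {}


# Placeholder answers to "List ten brands you associate with [Your Core Service]".
responses = {
    "gemini": [["BrandA", "BrandB", "YourBrand"], ["BrandB", "YourBrand"]],
    "chatgpt": [["YourBrand", "BrandA"]],
}
scores = visibility_scores(responses)
for brand, score in sorted(scores.items(), key=lambda kv: -kv[1]):
    print(f"{brand:10} {score:.3f}")
```

Repeating each prompt several times matters because model answers vary from run to run. Averaging smooths that noise into a score you can track week over week.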

Layer 5: Build Topical Authority with Query Fan-Out

AI Overviews don’t just cite a page with the right keyword; they cite the page that is the most definitive and comprehensive answer for an entire topic. To become that source, we have to shift from keyword research to topical modeling.

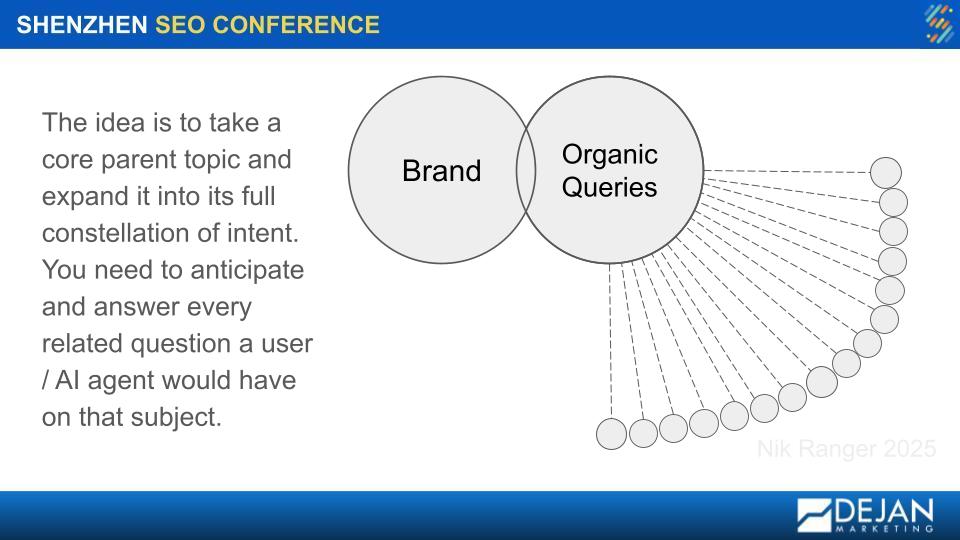

We call this the Query Fan-Out. The idea is to take a core parent topic and expand it into its full constellation of related intents, anticipating and answering every possible question a user or an AI agent might have on that subject. This process can generate millions of synthetic queries. We analyse them for patterns and prioritise them; then, depending on what the client has available, we roll out pages that map the most valuable pathways.

This gives us a strategic blueprint for creating single, authoritative pages that serve as the most logical and efficient source for an AI to use, dramatically increasing the chances of being cited.

To understand Query Fan-Out in more depth, check out Dan Petrovic’s post.
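To show why fan-out volumes grow so quickly, here’s a deliberately simplified, template-based sketch. It is a toy illustration of the combinatorics, not a reproduction of our fan-out process. The parent topic, facets and templates are all hypothetical. Every facet you add multiplies the number of queries.

```python
from __future__ import annotations

from itertools import product

# Hypothetical parent topic, facets and templates, purely for illustration.
PARENT = "rome food tours"
FACETS = {
    "audience": ["for families", "for couples", "for vegetarians"],
    "timing": ["in the evening", "in winter"],
}
TEMPLATES = ["{p} {m}", "best {p} {m}", "how to book {p} {m}"]


def fan_out(parent: str, facets: dict[str, list[str]], templates: list[str]) -> list[str]:
    """Expand a parent topic into every template x facet combination."""
    queries = []
    for template in templates:
        for combo in product(*facets.values()):
            queries.append(template.format(p=parent, m=" ".join(combo)))
    return queries


queries = fan_out(PARENT, FACETS, TEMPLATES)
print(len(queries))  # 3 templates x 3 audiences x 2 timings = 18
print(queries[:3])
```

With ten facets of ten values each, the same loop would produce ten billion combinations per template. That is why analysing, clustering and prioritising the output matters as much as generating it.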

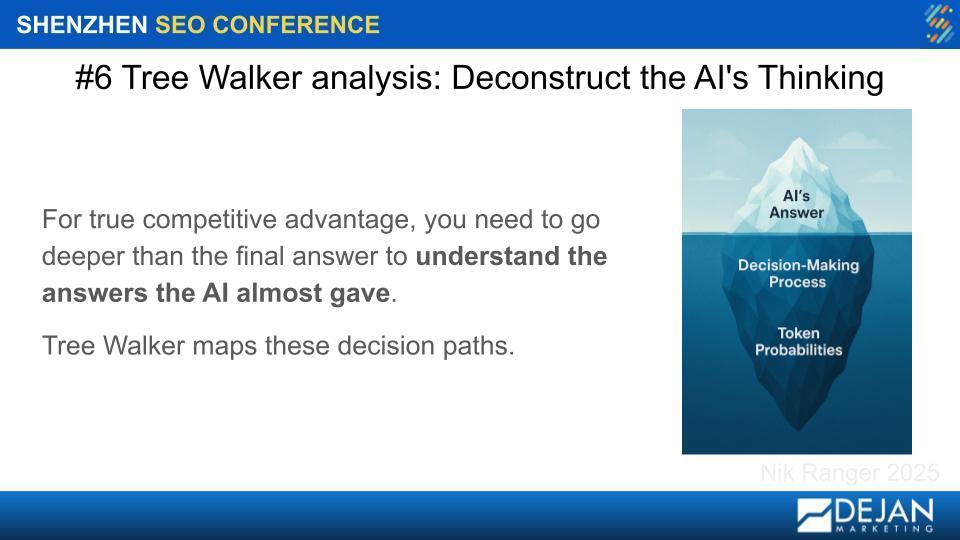

Layer 6: Unpack the AI’s Mind with Tree Walker Analysis

For a true competitive advantage, it’s not enough to see the AI’s final answer; I want to understand the answers it almost gave. That’s where we can analyse the output and deconstruct the AI’s thought process. This is what I was referring to earlier: understanding the Agentic Layer.

Our Tree Walker analysis is designed to do exactly this. It transforms the AI from an impenetrable black box into a source of clear, data-driven insight, and it has become an essential analytical layer in our work. It’s what helps us prioritise certain topics and entities from the thousands or millions of fan-out results.

The core principle is that when an AI model generates a sentence, it’s making a statistical choice at every single word or token. That applies whether it’s ChatGPT, Gemini or any other AI model. It calculates the probability of what should come next based on everything it has learned. Tree Walker maps these decision paths, highlighting where the model is confident and, more importantly, where it hesitates.
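Here’s a minimal sketch of the confidence-mapping idea. It assumes you can obtain next-token scores for each step. Open-weights models expose logits; some APIs return log-probabilities instead, which you can exponentiate directly rather than applying softmax. The tokens and numbers below are invented for illustration and are not the data from my presentation. The 0.5 threshold is an arbitrary assumption. This only scores the single path the model took; exploring the alternative branches, as a tree walk would, is left out for brevity.

```python
from __future__ import annotations

import math


def softmax(logits: dict[str, float]) -> dict[str, float]:
    """Convert raw next-token scores (logits) into probabilities."""
    peak = max(logits.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(score - peak) for tok, score in logits.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def confidence_path(
    steps: list[tuple[str, dict[str, float]]], threshold: float = 0.5
) -> list[tuple[str, float, bool]]:
    """For each generated token, return (token, probability, is_low_confidence)."""
    path = []
    for chosen, logits in steps:
        p = softmax(logits)[chosen]
        path.append((chosen, round(p, 3), p < threshold))
    return path


# Invented logits for "... promotes tours and activities".
steps = [
    ("promotes", {"promotes": 6.0, "offers": 2.0, "sells": 1.0}),
    ("tours", {"tours": 7.0, "travel": 2.5}),
    ("and", {"and": 1.0, ".": 1.2, ",": 0.5}),
    ("activities", {"activities": 3.0, "experiences": 2.2}),
]
path = confidence_path(steps)
for token, p, low in path:
    print(f"{token:12} {p:.3f} {'<-- hesitation' if low else ''}")
```

In this made-up example, the model is near-certain about ‘promotes’ and ‘tours’. At ‘and’, ending the sentence with a full stop was actually the slightly stronger candidate, which is the kind of hesitation worth investigating.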

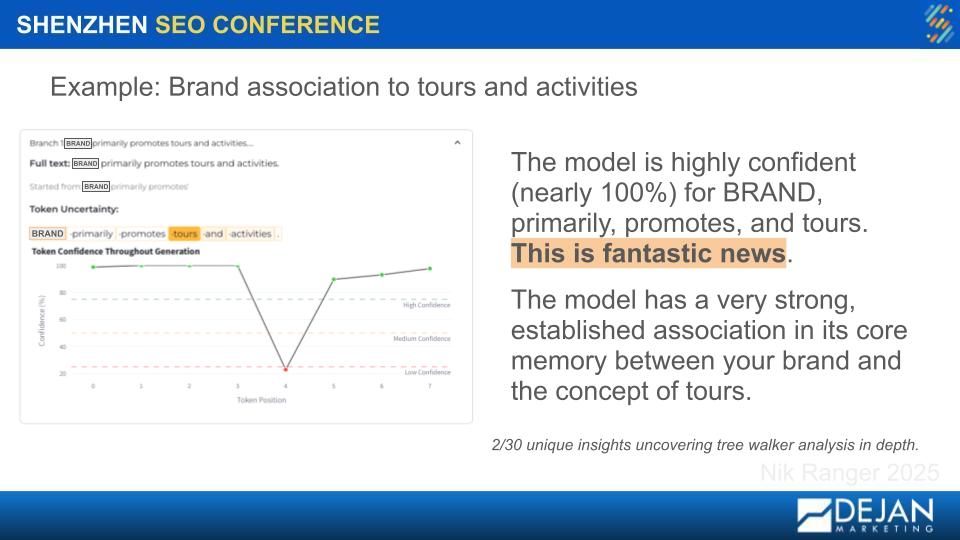

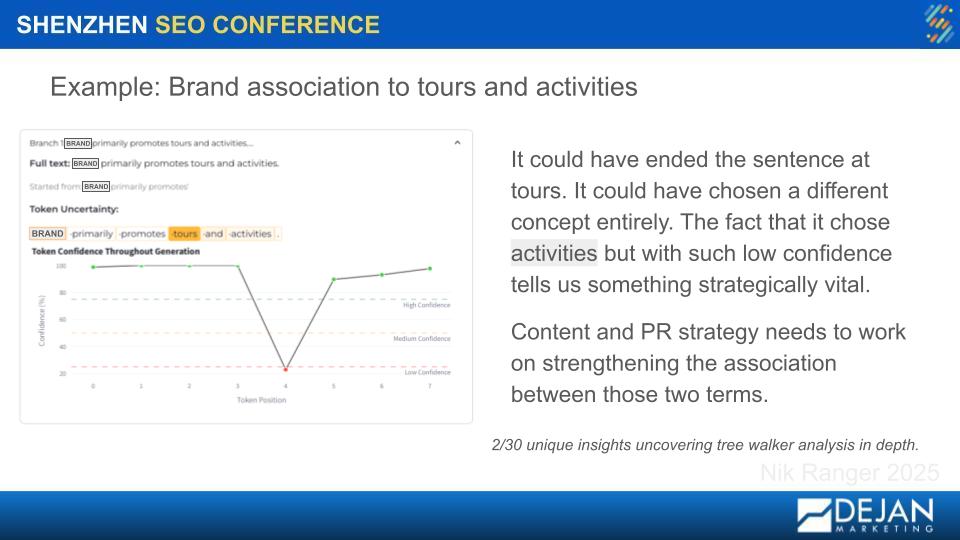

Let’s make this tangible with the example I used in my presentation: a major travel brand whose primary offerings are tours and activities. When we prompted the AI to describe the brand, it generated a sentence, and our Tree Walker analysis visualised its confidence for each token.

- High Confidence Signals Strengths: For the tokens ‘BRAND’, ‘primarily’, ‘promotes’ and ‘tours’, the model’s confidence was nearly 100%. This is fantastic news. It confirms a very strong, established association in its core memory between the brand and the concept of tours.

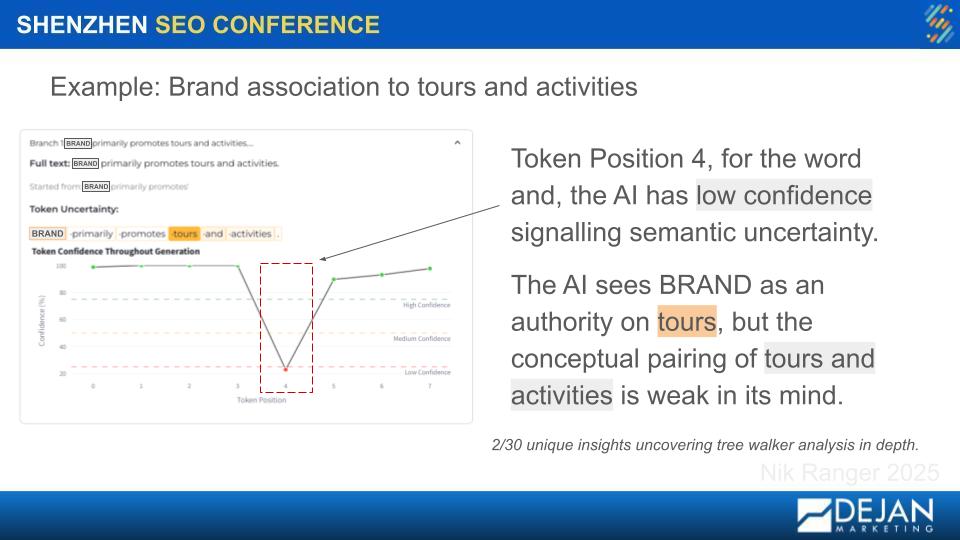

- Low Confidence Reveals Opportunities: At token position 4, for the simple word ‘and’, the model’s confidence dropped significantly before it continued with ‘activities’. This tiny drop is an important strategic insight: it signals semantic uncertainty.

The AI sees the brand as an authority on ‘tours’, but the conceptual pairing of ‘tours and activities’ is weak in its mind. It could have ended the sentence at ‘tours’; the fact that it went on to ‘activities’ with such low confidence tells us something strategically vital.

What’s important to understand here is that this is a direct indication from the AI that we may need to evaluate our content and branding to strengthen the association between our brand and the concept of ‘activities’. We can analyse the runner-up tokens the model considered instead and cross-reference them with the queries, entities and topics the business wants to be known for. Doing so uncovers a goldmine of related concepts and new content angles.

This allows us to move from broad content campaigns to more precise optimisations, creating content specifically designed to reinforce the weak neural pathways that Tree Walker has revealed, effectively teaching the AI to be more confident about who we are and what we offer.

In practice, that low-confidence ‘and’ tells us exactly where to focus our content and PR efforts: on strengthening the association between the brand and activities.

To learn more about Tree Walker Analysis, read Dan Petrovic’s post.
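The runner-up analysis described in this section can be sketched in a few lines. This assumes you already have the probability distribution over candidate tokens at the step you care about, for example from a model’s logits or log-probabilities. The probabilities and target terms below are invented. Matching single tokens against a list of target terms is a simplification, since real tokens are often word fragments.

```python
from __future__ import annotations


def runner_ups(probs: dict[str, float], chosen: str, k: int = 3) -> list[tuple[str, float]]:
    """Top-k alternative tokens the model considered instead of the chosen one."""
    alternatives = [(tok, p) for tok, p in probs.items() if tok != chosen]
    return sorted(alternatives, key=lambda item: item[1], reverse=True)[:k]


def cross_reference(
    alternatives: list[tuple[str, float]], target_terms: list[str]
) -> list[tuple[str, float]]:
    """Keep only alternatives matching topics or entities the business wants to be known for."""
    targets = {term.lower() for term in target_terms}
    return [(tok, p) for tok, p in alternatives if tok.strip().lower() in targets]


# Invented probabilities at the step where the model chose "activities".
step_probs = {
    "activities": 0.41,
    "experiences": 0.22,
    "attractions": 0.15,
    "transfers": 0.12,
    "cruises": 0.10,
}
alts = runner_ups(step_probs, chosen="activities")
matches = cross_reference(alts, ["experiences", "cruises", "day trips"])
print(alts)
print(matches)
```

Runner-ups that match your target terms are candidate content angles. Strong runner-ups that don’t match are worth a look too, since they show what else the model associates with your brand.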

Tying It All Together: Your Blueprint for AI Visibility

I started this whole presentation in Shenzhen with two fundamental questions that I was determined to answer. I hope that by this point you understand the clear, repeatable process for answering them, or at least know where to start and how to think about it. Let’s recap.

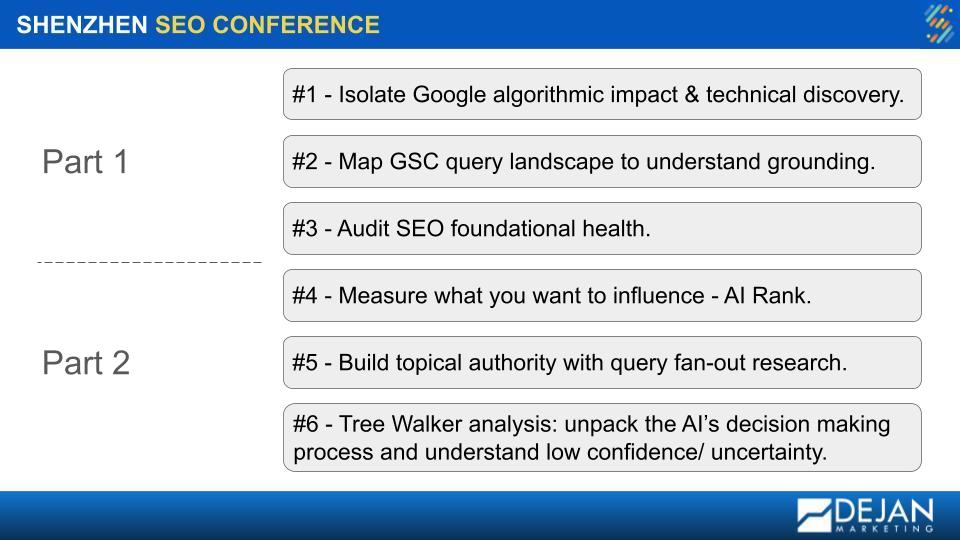

Question 1: Currently, how is my brand being impacted by AI?

Question/Part 1, the diagnostic phase, is where we start to truly understand your site, your services/products and, most importantly, your target audience.

- 1: Isolate algorithmic impact and technical issues to separate Google’s actions from our own and ensure bots can even access our content.

- 2: Map your GSC query landscape to understand customer journey, patterns and grounding, figuring out exactly where we have the power to influence AI driven results.

- 3: Audit our foundational SEO health to get a complete picture of our strengths and weaknesses, and prioritise actions.

Question 2: How can I influence the AI models to recommend my brand?

Question/Part 2 is where we move from diagnosis to action: the phase where we influence the AI models.

- 4: Measure what we want to influence with AI Rank, turning the abstract goal of brand perception into a concrete, trackable KPI.

- 5: Build comprehensive topical authority with query fan-out research, strategically creating the exact content the AI needs to see us as the definitive expert.

- 6: Use Tree Walker analysis to unpack the AI’s decision-making process, finding the points of uncertainty we need to reinforce.

The future of SEO isn’t about choosing between traditional SEO and AI or, god forbid, renaming SEO to GEO or any other garbage. It’s about integrating them, because SEO has evolved just like the network of advanced algorithms that govern modern search engines. So our job has evolved too, and we aim to:

- Diagnose your current standing by isolating algorithmic impact, mapping your query landscape, and auditing your foundational health.

- Strategise your influence by measuring your brand perception, building deep topical authority, and analysing the AI’s decision making process.

It’s about building genuine, measurable authority in the mind of the machine.

If you’ve read this far, please follow me on LinkedIn and give me kudos or, better yet, a recommendation. I really appreciate it. If you’d like to chat about your site and your vision for your brand, please reach out. Thanks!

Check out the full slide deck here: